Big data processing algorithms underpin how organizations manage and analyze datasets that are too large for conventional tools. This guide explains what these algorithms are and why they matter in modern data processing.

It covers the main types of algorithms, the challenges of processing data at scale, common optimization techniques, and future trends, with examples from several industries.

Overview of Big Data Processing Algorithms

Big data processing algorithms are the computational methods and techniques used to analyze, transform, and extract insights from massive datasets. They are designed to process large volumes of structured, semi-structured, and unstructured data in a timely and efficient manner.

Examples of Industries/Applications

- Finance: Big data processing algorithms are commonly used in financial institutions for fraud detection, risk assessment, and algorithmic trading.

- Healthcare: In the healthcare industry, these algorithms are utilized for patient diagnosis, personalized medicine, and health analytics.

- Retail: Retailers leverage big data processing algorithms for customer segmentation, demand forecasting, and personalized marketing campaigns.

- Telecommunications: Telecom companies apply these algorithms for network optimization, customer churn prediction, and service quality management.

Importance of Efficient Algorithms

Efficient big data processing algorithms play a crucial role in handling the immense volume, variety, and velocity of data generated in today’s digital world. By optimizing the processing speed and resource utilization, these algorithms enable organizations to extract actionable insights, make informed decisions, and gain a competitive edge in their respective industries.

Types of Big Data Processing Algorithms

Several types of algorithms are used to process big data. Each serves a specific purpose, depending on the nature of the data and the requirements of the task at hand.

Batch Processing Algorithms vs. Real-time Processing Algorithms

Batch processing algorithms and real-time processing algorithms are two primary types of algorithms used for processing big data. Batch processing involves collecting and processing data in large groups or batches, typically at scheduled intervals. This method is suitable for tasks that do not require immediate results and can tolerate some latency. On the other hand, real-time processing algorithms analyze and act on data as soon as it is generated, providing instant insights and responses. Real-time processing is essential for time-sensitive applications such as fraud detection, monitoring systems, and recommendation engines.

- Batch Processing Algorithms: These algorithms process large volumes of data at once, making them suitable for applications where data can be processed offline or at scheduled intervals. They are commonly implemented with the MapReduce programming model and with frameworks such as Apache Hadoop and Apache Spark.

- Real-time Processing Algorithms: These algorithms analyze data as it arrives, enabling immediate responses and insights. They typically run on stream-processing frameworks such as Apache Storm, Apache Flink, and Kafka Streams.
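The difference between the two styles can be illustrated with a minimal sketch. It assumes a small in-memory list of sensor readings (the names `batch_mean`, `streaming_mean`, and `readings` are illustrative, not taken from any framework): the batch function waits for the complete dataset, while the streaming function updates its result after every record.

```python
from typing import Iterable, Iterator, List


def batch_mean(readings: List[float]) -> float:
    """Batch style: wait for the full dataset, then compute once."""
    return sum(readings) / len(readings)


def streaming_mean(readings: Iterable[float]) -> Iterator[float]:
    """Streaming style: update the result as each record arrives."""
    count = 0
    mean = 0.0
    for value in readings:
        count += 1
        # Incremental update, so earlier records need not be stored.
        mean += (value - mean) / count
        yield mean


# Hypothetical sample data for illustration only.
readings = [10.0, 20.0, 30.0, 40.0]
print(batch_mean(readings))            # one answer after all data is collected
print(list(streaming_mean(readings)))  # an updated answer after every record
```

Both approaches end at the same final value. The streaming version trades a single pass over stored data for a result that is available at every step, which is the property time-sensitive applications rely on.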

Parallel Processing Algorithms

Parallel processing algorithms handle big data tasks by dividing the workload among multiple processing units or nodes. Because the units work at the same time, processing is faster and the system can scale by adding more units, which makes this approach well suited to massive datasets.

- Distributed Processing: In distributed processing, data is divided into smaller chunks that are processed simultaneously on multiple nodes. This reduces processing time, and when chunks are replicated or can be reprocessed on another node, it also improves fault tolerance.

- Parallel Computing: Parallel computing algorithms leverage multiple processing units to execute tasks concurrently, speeding up data processing and analysis. Examples include parallel sorting algorithms, parallel search algorithms, and parallel matrix multiplication algorithms.
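The divide-and-combine pattern described above can be sketched on a single machine with Python's standard `concurrent.futures` module. This is an illustration under simplifying assumptions: the helper names are hypothetical, the workload (a sum of squares) is a stand-in for real analysis, and worker threads stand in for the nodes of a cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split_into_chunks(data: Sequence[int], num_chunks: int) -> List[Sequence[int]]:
    """Divide the data into roughly equal, contiguous chunks."""
    size = max(1, -(-len(data) // num_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk: Sequence[int]) -> int:
    """Work performed independently on each chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: Sequence[int], workers: int = 4) -> int:
    """Process chunks concurrently, then combine the partial results."""
    chunks = split_into_chunks(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


data = list(range(1, 1001))
print(parallel_sum_of_squares(data))
```

In CPython, threads do not usually speed up CPU-bound work like this because of the global interpreter lock. `ProcessPoolExecutor` offers the same `map` interface and is the more likely choice for real CPU-bound workloads; threads are used here only to keep the sketch simple.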

Challenges in Big Data Processing

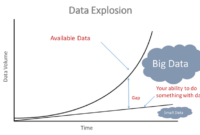

Processing big data comes with its own set of challenges, particularly when using traditional algorithms that may not be equipped to handle the sheer volume, variety, and velocity of data generated in today’s digital world.

Scalability and performance are major concerns when dealing with big data. Traditional algorithms may struggle to scale efficiently as the size of the data increases. This can lead to bottlenecks and slowdowns in processing, affecting overall performance and productivity. Specialized big data algorithms are designed to address these scalability and performance issues by optimizing processing techniques for large datasets.

The impact of data variety, velocity, and volume on algorithm selection cannot be overstated. The volume of data generated every day calls for algorithms that scale to very large inputs, while its velocity calls for algorithms that can keep up with a continuous influx of information, often in real time. Data variety, including structured, semi-structured, and unstructured data, further complicates the task, because traditional algorithms may not be able to analyze diverse data sources effectively.

In short, the limits of traditional algorithms highlight the need for specialized big data algorithms that scale efficiently, optimize performance, and handle the variety, velocity, and volume of data generated in today’s digital landscape.

Optimization Techniques for Big Data Algorithms

Optimization techniques improve the performance of big data processing algorithms. Two widely used approaches are preparing the data well before it reaches the algorithm and running the algorithm on a distributed computing framework.

Data Preprocessing and Feature Engineering

Data preprocessing involves cleaning, transforming, and organizing raw data before feeding it into the algorithm. This step helps in removing noise, handling missing values, and standardizing data formats, which ultimately leads to more accurate results. Feature engineering focuses on selecting, creating, or transforming features to improve model performance. By identifying relevant features and eliminating irrelevant ones, the algorithm can work more efficiently and produce better outcomes.
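Two of the preprocessing steps mentioned above, handling missing values and standardizing a numeric column, can be sketched with the Python standard library. The function names and the sample column are hypothetical. Mean imputation is only one of several possible strategies and is chosen here for brevity.

```python
from statistics import mean, pstdev
from typing import List, Optional


def impute_missing(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def standardize(values: List[float]) -> List[float]:
    """Rescale values to zero mean and unit variance (z-scores)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical column with one missing value.
raw_ages = [20.0, None, 40.0, 30.0]
clean_ages = impute_missing(raw_ages)
print(clean_ages)
print(standardize(clean_ages))
```

Standardized columns put features on a comparable scale, which tends to help algorithms that are sensitive to feature magnitude.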

Utilizing Distributed Computing Frameworks

Distributed computing frameworks like Hadoop and Spark are essential for optimizing algorithm execution in big data processing. These frameworks allow for parallel processing of data across multiple nodes, enabling faster computation and improved scalability. By distributing the workload efficiently, algorithms can leverage the resources of a cluster to handle large datasets effectively.
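As a rough illustration of the model behind these frameworks, the sketch below runs a MapReduce-style word count in plain Python on one machine. It does not use the Hadoop or Spark APIs, and the phase function names are illustrative. In a real cluster, the map and reduce phases would run on different nodes and the framework would perform the shuffle over the network.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: combine the values for each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input records for illustration only.
lines = ["big data needs big clusters", "data moves fast"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = reduce_phase(shuffle_phase(mapped))
print(counts)
```

Because each call to `map_phase` depends only on its own record and each key is reduced independently, both phases can be spread across many nodes without coordination, which is what lets this model scale.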

Future Trends in Big Data Processing Algorithms

The future of big data processing algorithms is set to be shaped by emerging technologies such as machine learning and artificial intelligence (AI). These advanced technologies are expected to play a crucial role in the development of new algorithms that can handle the increasing volume, velocity, and variety of data in the big data landscape.

Machine Learning and AI Integration

Machine learning and AI algorithms are becoming increasingly integrated into big data processing systems to improve efficiency and accuracy. These technologies enable algorithms to learn from data patterns, adapt to changing environments, and make predictions based on historical data. As machine learning and AI continue to evolve, we can expect to see more sophisticated algorithms that can process data faster, detect anomalies more effectively, and provide more accurate insights.

- Machine learning algorithms can automate data processing tasks, such as data cleaning, transformation, and analysis, reducing the need for manual intervention and speeding up the overall process.

- AI algorithms can enhance decision-making processes by identifying complex patterns in data, predicting future trends, and optimizing algorithms for better performance.

- The integration of machine learning and AI into big data processing algorithms can lead to more intelligent and adaptive systems that can continuously improve and optimize their performance over time.

Impact of Quantum Computing

Another significant trend is the potential impact of quantum computing. For certain classes of problems, quantum computers are expected to perform computations far faster than classical computers, in some cases exponentially so, although the speedup depends on the problem and practical large-scale hardware is still under development. This could lead to quantum algorithms that handle some data processing tasks with much greater efficiency.

Quantum computing may eventually solve some complex big data processing problems that are impractical on classical computers, opening up new possibilities for data analysis and optimization.

- Quantum algorithms could potentially speed up parts of tasks such as clustering, classification, and regression compared with classical algorithms, although such advantages remain an active area of research.

- The integration of quantum computing with big data processing algorithms could result in more scalable, efficient, and secure data processing systems that can handle the challenges posed by the ever-increasing volume and complexity of data.

In conclusion, Big Data processing algorithms stand as the cornerstone of effective data management, offering unparalleled insights and solutions to complex data challenges. As technology continues to advance, the evolution of these algorithms promises a future where data processing reaches new heights of efficiency and innovation.

When it comes to managing and analyzing vast amounts of data, businesses often turn to data warehouse technology. This technology stores data from multiple sources in a centralized location, making it easier to access, organize, and analyze, which helps companies make informed decisions.

Organizations dealing with massive amounts of data are also increasingly using distributed data storage, which spreads data across multiple servers or locations to improve performance, scalability, and fault tolerance.

One popular distributed storage system, and a key component of the Hadoop ecosystem, is the Hadoop Distributed File System (HDFS), which is designed to store and manage large datasets across clusters of computers. HDFS offers high availability and reliability, making it a popular choice for big data processing.