In today’s data-driven world, combining big data processing with AI technologies has opened up new possibilities for businesses and industries alike.

This article looks at how artificial intelligence changes the way large volumes of data are analyzed and used, the challenges that come with processing data at this scale, and the opportunities that AI-based approaches create.

Overview of Big Data Processing with AI

Big data processing with artificial intelligence (AI) involves the use of advanced algorithms and machine learning techniques to analyze and extract valuable insights from large and complex data sets. AI has revolutionized the way big data is handled, making it possible to identify patterns, trends, and correlations that were previously difficult or impossible to detect.

Impact of AI on Big Data Processing

AI has had a significant impact on various industries and applications, enhancing decision-making processes and driving innovation. Some examples include:

- Healthcare: AI is used to analyze large volumes of medical data to improve diagnosis accuracy, predict patient outcomes, and personalize treatment plans.

- Finance: AI algorithms are employed to detect fraudulent activities, optimize trading strategies, and provide personalized financial recommendations to customers.

- Retail: AI-powered analytics help retailers understand customer preferences, optimize pricing strategies, and forecast demand to improve inventory management.

- Manufacturing: AI is utilized for predictive maintenance, quality control, and supply chain optimization, leading to increased efficiency and reduced downtime.

Challenges in Big Data Processing

Processing large volumes of data comes with its own set of challenges that organizations need to overcome to derive meaningful insights. These challenges include issues related to storage, processing speed, data quality, and scalability.

Storage Challenges

Storing massive amounts of data can be a major challenge for organizations. Traditional storage solutions may not be equipped to handle the volume of data generated daily. This can lead to increased costs and inefficiencies in data management.

Processing Speed

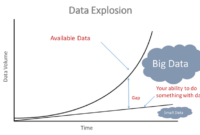

Processing big data in a timely manner is crucial for extracting valuable insights. Slow processing can hinder real-time analysis and decision-making, and the problem becomes more pressing as data volumes continue to grow.

Data Quality

Ensuring the quality of data is another challenge in big data processing. With large volumes of data coming from various sources, organizations often struggle with data accuracy, completeness, and consistency. Poor data quality can lead to incorrect insights and decisions.
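
The three dimensions named above (accuracy, completeness, and consistency) can each be turned into a simple automated check. The following is a minimal sketch, not a production validator: the sample records, the field names, the `quality_report` helper, and the 0 to 120 age range are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical records merged from several sources; None marks a missing value.
records = [
    {"id": 1, "age": 34, "country": "US"},
    {"id": 2, "age": None, "country": "us"},
    {"id": 3, "age": 210, "country": "DE"},
    {"id": 3, "age": 41, "country": "DE"},
]

def quality_report(rows, required, valid_age=(0, 120)):
    """Count simple completeness, accuracy, and consistency problems."""
    issues = Counter()
    seen_ids = set()
    for row in rows:
        # Completeness: every required field must be present and non-null.
        if any(row.get(field) is None for field in required):
            issues["incomplete"] += 1
        # Accuracy: a crude range check on a numeric field.
        age = row.get("age")
        if age is not None and not (valid_age[0] <= age <= valid_age[1]):
            issues["out_of_range"] += 1
        # Consistency: an id should appear only once...
        if row["id"] in seen_ids:
            issues["duplicate_id"] += 1
        seen_ids.add(row["id"])
        # ...and country codes should share one casing convention.
        country = row.get("country")
        if country is not None and country != country.upper():
            issues["inconsistent_code"] += 1
    return dict(issues)

report = quality_report(records, required=("id", "age", "country"))
print(report)
```

In practice, checks like these would run as part of the ingestion pipeline so that problem records are flagged before they reach any model or report.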

Scalability

As data volumes increase, organizations need scalable solutions that can handle growing data requirements. Traditional processing systems may not be able to scale effectively to meet the demands of big data processing. Scalability challenges can impact performance and hinder the ability to process data efficiently.

AI Solutions for Big Data Challenges

Artificial Intelligence (AI) technologies offer innovative solutions to address the challenges faced in big data processing. AI-powered tools can automate data processing tasks, improve data quality through intelligent algorithms, enhance processing speed with parallel processing capabilities, and provide scalable solutions for handling large volumes of data.

Real-World Examples

- Netflix uses AI algorithms to process vast amounts of viewer data to recommend personalized content to its users, improving user experience and engagement.

- Amazon leverages AI for real-time data processing to optimize product recommendations based on user behavior and preferences, leading to increased sales and customer satisfaction.

- Healthcare organizations utilize AI-powered systems to process medical data and identify patterns for early disease detection, improving patient outcomes and reducing healthcare costs.

Tools and Technologies for Big Data Processing with AI

Several tools and technologies are commonly used in the industry to process big data with AI. Some handle distributed storage and computation, while others provide the machine learning algorithms that run on top of that data.

Popular Tools for Big Data Processing

- Hadoop: An open-source framework that allows for the distributed processing of large data sets across clusters of computers.

- Spark: Another open-source framework that provides in-memory data processing for big data analytics.

- TensorFlow: An open-source machine learning framework developed by Google for building and training neural networks.

- PyTorch: Another popular open-source machine learning library that is widely used for deep learning tasks.
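
Hadoop's distributed processing is built around the MapReduce model: input is split into chunks, a map step turns each chunk into key-value pairs, the pairs are grouped by key, and a reduce step aggregates each group. The sketch below imitates those phases on a single machine in plain Python to show the data flow. The function names and the sample input are illustrative assumptions and are not part of Hadoop's API.

```python
from collections import defaultdict
from itertools import chain

def map_phase(chunk):
    """Emit (word, 1) pairs for one chunk of input, as a mapper would."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]

def shuffle(pairs):
    """Group values by key, standing in for the framework's shuffle step."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Sum the values for each key, as a reducer would."""
    return {key: sum(values) for key, values in groups.items()}

# Two hypothetical input splits; on a cluster each could live on a different node.
chunks = [
    ["big data needs big clusters"],
    ["data moves to the cluster", "Big wins"],
]

mapped = chain.from_iterable(map_phase(chunk) for chunk in chunks)
counts = reduce_phase(shuffle(mapped))
print(counts["big"], counts["data"])
```

Because each call to `map_phase` depends only on its own chunk, a real framework can run the map step on many machines at once. That independence is what makes the model scale.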

Common AI Algorithms for Big Data Processing

- Decision Trees: A popular algorithm used for classification and regression tasks in big data processing.

- Random Forest: An ensemble learning method that combines multiple decision trees for improved accuracy.

- Support Vector Machines (SVM): A supervised learning algorithm used for classification and regression tasks.

- Neural Networks: Models loosely inspired by the human brain and built from layers of connected units. Deep networks with many layers are capable of learning complex patterns in data.
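
To make the first entry in this list concrete, here is a minimal sketch of the smallest possible decision tree: a single split on one feature, often called a decision stump. It is an illustration under stated assumptions, not a library implementation. The `fit_stump` and `predict` helpers and the transaction data are hypothetical, and the stump only considers rules of the form "predict 1 when the value exceeds a threshold".

```python
def fit_stump(xs, ys):
    """Find the threshold on one feature that misclassifies the fewest points.

    The learned rule predicts 1 when x > threshold and 0 otherwise,
    which is a decision tree of depth 1.
    """
    best_threshold, best_errors = None, len(xs) + 1
    values = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive distinct values.
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        errors = sum((x > threshold) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors

def predict(threshold, x):
    """Apply the learned split to a new value."""
    return 1 if x > threshold else 0

# Hypothetical training data: transaction amount and a fraud label (1 = fraud).
amounts = [12.0, 25.0, 40.0, 55.0, 300.0, 450.0, 520.0]
labels = [0, 0, 0, 0, 1, 1, 1]

threshold, errors = fit_stump(amounts, labels)
print(threshold, errors)
print(predict(threshold, 35.0), predict(threshold, 480.0))
```

A full decision tree repeats this split search recursively on each side of the threshold. A random forest trains many such trees on resampled data and combines their votes.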

Enhanced Efficiency and Accuracy

Combining these frameworks and algorithms speeds up the processing of large data sets without giving up accuracy. Hadoop and Spark distribute work across the nodes of a cluster, so massive amounts of data are processed in parallel rather than sequentially, which shortens the time to insight. Algorithms such as neural networks and SVMs can then learn patterns that simpler hand-written rules tend to miss, which can improve the accuracy of the results.

Impact of Big Data Processing with AI on Businesses

Businesses are increasingly leveraging big data processing combined with AI to gain competitive advantages in today’s fast-paced market environment. By harnessing the power of artificial intelligence to analyze and extract insights from massive datasets, companies can make data-driven decisions that drive innovation, improve operational efficiency, and enhance customer experiences.

Role of Predictive Analytics in Business Decision-Making

Predictive analytics plays a crucial role in business decision-making processes by using historical data, statistical algorithms, and machine learning techniques to forecast future outcomes. This enables businesses to anticipate trends, identify opportunities, mitigate risks, and optimize strategic planning.

- By analyzing customer behavior patterns and preferences, businesses can personalize marketing campaigns, tailor product offerings, and enhance customer engagement.

- Predictive analytics can also optimize supply chain management by forecasting demand, improving inventory management, and reducing operational costs.

- Businesses can use predictive models to identify potential fraud, detect anomalies, and enhance cybersecurity measures to protect sensitive data.
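
As a small illustration of forecasting from historical data, the sketch below fits a straight-line trend to past demand with ordinary least squares and extrapolates one period ahead. The sales figures and the `fit_line` helper are assumptions made for the example. Real demand forecasting would normally also account for seasonality and use far more data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical monthly unit sales for months 1 through 6.
months = [1, 2, 3, 4, 5, 6]
units = [100, 110, 120, 130, 140, 150]

slope, intercept = fit_line(months, units)
forecast_month_7 = slope * 7 + intercept
print(round(forecast_month_7))
```

The same pattern of fitting on history and then predicting the next period underlies more elaborate models. Only the form of the fitted function changes.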

Case Studies of Companies Integrating AI into Big Data Processing

Several companies across various industries have successfully integrated AI into their big data processing workflows to drive business growth and innovation.

One notable example is Netflix, which uses AI-driven algorithms to analyze viewer preferences and behavior, recommend personalized content, and optimize its streaming platform for a seamless user experience.

Amazon leverages AI-powered predictive analytics to forecast customer demand, optimize product recommendations, and streamline its logistics operations for faster delivery and enhanced customer satisfaction.

Google utilizes AI and machine learning to improve search algorithms, enhance ad targeting, and personalize user experiences across its suite of products and services.

In conclusion, the fusion of Big Data processing with AI is not just a trend but a strategic imperative for organizations looking to stay ahead in a data-driven landscape. By harnessing the power of AI to process and analyze Big Data, businesses can unlock valuable insights, drive innovation, and gain a competitive edge in today’s digital age.
