Big Data storage architecture covers the components and strategies used to store and manage very large volumes of data efficiently. This article focuses on three recurring concerns: scalability, redundancy, and optimization.

It covers distributed file systems, data replication and backup, scalability and elasticity, and data partitioning, and it outlines both the complexities and the advantages of modern data storage solutions.

Overview of Big Data Storage Architecture

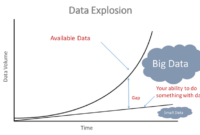

Big Data storage architecture refers to the design and structure of storage systems specifically tailored to handle large volumes of data. With the exponential growth of data in today’s digital age, traditional storage solutions are often insufficient to manage the vast amounts of information generated by businesses and organizations.

Cloud data storage has become a common foundation for these systems, because businesses want secure and reliable places to keep their information. Data lakes have emerged as a popular option because they offer scalability and flexibility for managing large volumes of data. Companies that need a distributed NoSQL database can also benefit from Apache Cassandra.

Key Components of Big Data Storage Architecture

- Data Lakes: Data lakes are centralized repositories that allow for the storage of structured, semi-structured, and unstructured data at scale. They enable organizations to store vast amounts of data in its raw form for future analysis.

- Distributed File Systems: Distributed file systems like Hadoop Distributed File System (HDFS) are designed to store and manage large files across multiple nodes in a cluster. This allows for parallel processing and improved fault tolerance.

- NoSQL Databases: NoSQL databases such as MongoDB, Cassandra, and Couchbase handle semi-structured and unstructured data efficiently. Their flexible schemas and horizontal scalability let them manage diverse data types.

- Data Warehouses: Data warehouses are used for storing and analyzing structured data from various sources. They are optimized for complex queries and data analysis, making them essential components of Big Data storage architecture.

Comparison with Traditional Data Storage

Traditional data storage systems rely on relational databases and file systems that can struggle with the volume, variety, and velocity of data in Big Data environments. Big Data storage architecture, by contrast, emphasizes scalability, flexibility, and the ability to process data in real time to derive valuable insights.

Cloud storage is one common way to meet these demands. Businesses are increasingly turning to scalable, secure cloud services to manage their growing data. These services can hold vast amounts of information in one centrally managed location and offer users flexibility and accessibility.

Distributed File Systems

Distributed file systems play a crucial role in Big Data storage architecture by allowing data to be stored across multiple nodes in a network, enabling scalability and fault tolerance.
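
The following sketch makes the idea concrete. It splits a file into fixed-size blocks, assigns each block to several nodes, and then checks whether the file is still readable after some nodes fail. This is a simplified, hypothetical model under several assumptions. The names `BlockPlacement`, `place_blocks`, and `readable_after_failures` are illustrative. Placement is plain round-robin, whereas HDFS actually uses a rack-aware policy. The 128 MB block size and replication factor of 3 in the example only mirror common HDFS defaults.

```python
from dataclasses import dataclass


@dataclass
class BlockPlacement:
    block_id: int
    offset: int          # byte offset of the block within the file
    length: int          # block length in bytes (the last block may be shorter)
    replicas: list[str]  # nodes holding a copy of this block


def place_blocks(file_size: int, block_size: int, nodes: list[str],
                 replication: int) -> list[BlockPlacement]:
    """Split a file into fixed-size blocks and give each block `replication` distinct nodes.

    Hypothetical round-robin placement; real systems such as HDFS use rack-aware policies.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    if not 1 <= replication <= len(nodes):
        raise ValueError("replication must be between 1 and the number of nodes")
    placements = []
    num_blocks = (file_size + block_size - 1) // block_size  # ceiling division
    for b in range(num_blocks):
        offset = b * block_size
        length = min(block_size, file_size - offset)
        # Shift the starting node per block so storage load spreads across the cluster.
        replicas = [nodes[(b + i) % len(nodes)] for i in range(replication)]
        placements.append(BlockPlacement(b, offset, length, replicas))
    return placements


def readable_after_failures(placements: list[BlockPlacement], failed: set[str]) -> bool:
    """True if every block still has at least one replica on a node that has not failed."""
    return all(any(node not in failed for node in p.replicas) for p in placements)


if __name__ == "__main__":
    MB = 1024 * 1024
    nodes = ["node-1", "node-2", "node-3", "node-4"]
    layout = place_blocks(300 * MB, 128 * MB, nodes, replication=3)
    for p in layout:
        print(p.block_id, p.length // MB, "MB on", p.replicas)
    # With 3 distinct replicas per block, any two node failures are tolerated.
    print(readable_after_failures(layout, {"node-1", "node-2"}))  # → True
```

A 300 MB file becomes two 128 MB blocks and one 44 MB block. Because every block lives on three different nodes, losing any two nodes still leaves a readable copy of each block.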

Examples of Popular Distributed File Systems

- Hadoop Distributed File System (HDFS): Part of the Apache Hadoop project, HDFS is widely used for Big Data storage because of its scalability and reliability.

- Google File System (GFS): A proprietary system developed at Google, GFS was designed to handle large amounts of data across distributed infrastructure. Its published design inspired HDFS.

- Amazon Simple Storage Service (S3): While not a traditional file system, S3 provides scalable object storage that is commonly used in Big Data applications.

Advantages and Challenges of Implementing Distributed File Systems

Implementing distributed file systems offers several advantages, including:

- Scalability: Distributed file systems can easily scale out by adding more nodes to the network.

- Fault Tolerance: Data redundancy and replication in distributed file systems help ensure data availability in case of node failures.

- High Performance: By spreading data across multiple nodes, distributed file systems can serve reads and writes in parallel, which increases aggregate throughput.

However, there are also challenges associated with implementing distributed file systems:

- Complexity: Managing a distributed file system can be complex and requires specialized knowledge and expertise.

- Consistency: Ensuring data consistency across all nodes in a distributed file system can be challenging, especially in large-scale deployments.

- Network Overhead: The communication overhead between nodes in a distributed file system can impact performance, especially in geographically dispersed environments.
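
Replicated stores often handle the consistency challenge with quorums. Cassandra's QUORUM consistency level is one example. The minimal Python sketch below assumes three quantities:

- N replicas per item.
- W acknowledgements required per write.
- R replicas consulted per read.

The sketch checks by brute force that every read set overlaps every write set exactly when R + W > N. The function names are hypothetical. Overlap alone does not guarantee consistency: real systems also need versions or timestamps to pick the newest value among the replicas they read.

```python
from itertools import combinations


def quorum_condition(n: int, w: int, r: int) -> bool:
    """Closed-form test: read and write quorums must intersect when R + W > N."""
    return r + w > n


def quorums_always_overlap(n: int, w: int, r: int) -> bool:
    """Brute-force check that every read quorum shares a replica with every write quorum."""
    replicas = range(n)
    for write_set in combinations(replicas, w):
        for read_set in combinations(replicas, r):
            if set(write_set).isdisjoint(read_set):
                return False  # this read could miss the newest write entirely
    return True


if __name__ == "__main__":
    for n, w, r in [(3, 2, 2), (3, 1, 1), (5, 3, 3), (5, 2, 3)]:
        print(f"N={n} W={w} R={r}: overlap={quorums_always_overlap(n, w, r)}")
```

Raising W makes writes slower but lets reads use a smaller R, and the reverse also holds. Choosing W and R is therefore a trade-off between latency and consistency, not a free setting.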

Data Replication and Backup Strategies

Data replication plays a crucial role in Big Data storage architecture by ensuring data availability, reliability, and fault tolerance. By creating multiple copies of data across different nodes or servers, data replication helps prevent data loss and enables quick access to information when needed.

Backup Strategies

- Full Backup: A complete backup of all data is taken at regular intervals. While this ensures comprehensive data protection, it can be time-consuming and resource-intensive.

- Incremental Backup: Only the changes made since the most recent backup of any kind are saved, which reduces storage space and backup time. However, a restore requires the last full backup plus every incremental backup taken since, applied in order.

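- Differential Backup: Saves all changes made since the last full backup. Each differential grows until the next full backup, but a restore needs only the last full backup and the most recent differential. This makes restores quicker than replaying a chain of incremental backups.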

- Mirror Backup: An exact copy of data is created in real-time on another storage device. This provides immediate access to data in case of primary storage failure.
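
The first three strategies differ in two ways: which files each backup copies, and how many backups a restore needs. The following is a simplified model built on three assumptions:

- Timestamps are logical integers.
- `files` maps a file name to its last-modified time.
- `Backup`, `files_to_copy`, and `restore_chain` are hypothetical helpers, not the API of any backup tool.

Mirror backups are not modeled, because they maintain a continuously updated copy rather than a series of snapshots.

```python
from dataclasses import dataclass


@dataclass
class Backup:
    kind: str      # "full", "incremental", or "differential"
    taken_at: int  # logical timestamp when the backup ran


def files_to_copy(kind: str, files: dict[str, int], history: list[Backup]) -> set[str]:
    """Pick the files a new backup must copy. `files` maps name -> last-modified time."""
    if kind == "full":
        return set(files)
    if kind == "incremental":
        # Baseline: the most recent backup of any kind.
        baseline = max((b.taken_at for b in history), default=None)
    elif kind == "differential":
        # Baseline: the most recent full backup.
        baseline = max((b.taken_at for b in history if b.kind == "full"), default=None)
    else:
        raise ValueError(f"unknown backup kind: {kind}")
    if baseline is None:
        return set(files)  # no baseline yet, so copy everything
    return {name for name, modified in files.items() if modified > baseline}


def restore_chain(history: list[Backup]) -> list[Backup]:
    """Backups to apply, oldest first, to restore the latest state.

    Assumes `history` is in chronological order.
    """
    fulls = [i for i, b in enumerate(history) if b.kind == "full"]
    if not fulls:
        raise ValueError("cannot restore without a full backup")
    chain = [history[fulls[-1]]]
    later = history[fulls[-1] + 1:]
    # The newest differential already contains every change since the full backup.
    diffs = [i for i, b in enumerate(later) if b.kind == "differential"]
    start = 0
    if diffs:
        chain.append(later[diffs[-1]])
        start = diffs[-1] + 1
    # Incrementals taken after that point must all be replayed in order.
    chain.extend(b for b in later[start:] if b.kind == "incremental")
    return chain
```

Consider a full backup followed by three incrementals: `restore_chain` returns all four backups. Replace the incrementals with differentials and it returns only the full backup and the newest differential.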

Importance of Data Redundancy

Data redundancy, achieved through data replication and backup strategies, is essential for ensuring data durability and availability. In the event of hardware failures, natural disasters, or cyber attacks, having redundant copies of data ensures continuity of operations and minimizes the risk of data loss. By maintaining multiple copies of data, organizations can safeguard against unexpected events and maintain business continuity.
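
Redundancy can also be reasoned about quantitatively. Suppose each copy is lost independently with probability p over some period. Then all r copies are lost with probability p^r, while raw storage grows linearly with r. The sketch below relies on that independence, which real deployments only approximate. A rack or data-center outage can take out several copies at once, which is why replicas are spread across failure domains. The 1% figure and the function names are purely illustrative.

```python
def loss_probability(p: float, copies: int) -> float:
    """Chance that all copies are lost, assuming each fails independently with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if copies < 1:
        raise ValueError("need at least one copy")
    return p ** copies


def raw_storage(data_size: float, copies: int) -> float:
    """Raw capacity consumed when every byte is stored `copies` times."""
    return data_size * copies


if __name__ == "__main__":
    p = 0.01  # hypothetical per-copy loss probability over some period
    for copies in (1, 2, 3):
        print(f"{copies} copies: loss probability {loss_probability(p, copies):.0e}, "
              f"raw storage for 10 TB = {raw_storage(10.0, copies):.0f} TB")
```

Under these assumptions, going from one copy to three cuts the loss probability from one in a hundred to about one in a million, at the cost of tripling raw storage.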

Scalability and Elasticity

Scalability and elasticity are crucial concepts in the context of Big Data storage architecture. Scalability refers to the ability of a system to handle an increasing amount of data or workload by adding more resources to the existing system. On the other hand, elasticity focuses on the dynamic allocation and deallocation of resources based on the current workload, ensuring optimal resource utilization.

Techniques for Achieving Scalability

- Horizontal scaling: Also known as scaling out, this technique involves adding more nodes to the system to distribute the workload and increase storage capacity.

- Partitioning: Dividing the data into smaller partitions allows for parallel processing and efficient data retrieval, enhancing scalability.

- Data replication: Replicating data across multiple nodes provides redundancy and fault tolerance. It also contributes to scalability, because read requests can be served from any replica.

Elasticity for Efficient Resource Allocation

- Auto-scaling: Automated processes monitor the system’s performance and adjust the resources dynamically to meet the current demand, optimizing resource allocation.

- Resource pooling: By pooling resources together and allocating them based on the workload, elasticity enables efficient utilization of resources, preventing over-provisioning or underutilization.

- On-demand provisioning: Resources are provisioned as needed, allowing for flexibility and cost-effectiveness in managing Big Data storage systems.
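
Auto-scaling policies are often proportional: if nodes run hotter than a target utilization, capacity is added in proportion to the overshoot. The sketch below follows the shape of the rule documented for the Kubernetes Horizontal Pod Autoscaler:

desired = ceil(current * currentMetric / targetMetric)

It adds a tolerance band to avoid flapping and clamps the result to minimum and maximum bounds. The function name, the parameters, and the defaults of 1, 100, and 0.1 are assumptions for illustration, not settings of any particular storage system.

```python
import math


def desired_nodes(current_nodes: int, current_utilization: float, target_utilization: float,
                  min_nodes: int = 1, max_nodes: int = 100, tolerance: float = 0.1) -> int:
    """Proportional scaling rule: resize so utilization moves back toward the target."""
    if current_nodes < 1 or target_utilization <= 0:
        raise ValueError("need at least one node and a positive target")
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_nodes  # close enough to target: do nothing, to avoid flapping
    else:
        desired = math.ceil(current_nodes * ratio)
    return max(min_nodes, min(max_nodes, desired))  # clamp to configured bounds


if __name__ == "__main__":
    print(desired_nodes(4, 90, 60))   # 4 nodes at 90% vs. a 60% target → 6
    print(desired_nodes(10, 30, 60))  # 10 nodes at 30% vs. a 60% target → 5
```

The same rule scales in both directions. This is what distinguishes elasticity from simply adding capacity: resources are released when demand drops, not only acquired when it rises.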

Data Partitioning and Sharding

Data partitioning and sharding are essential techniques in Big Data storage architecture that involve breaking down large datasets into smaller, more manageable parts for improved performance and scalability.
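
The two most common ways to decide which partition a record belongs to are hash partitioning and range partitioning. The Python sketch below shows both. The function names and split points are hypothetical. MD5 is used only to get a hash that is stable across processes (Python's built-in `hash()` for strings is randomized per process), not for security.

```python
import bisect
import hashlib


def hash_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition by hashing it (stable across runs, unlike built-in hash())."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def range_partition(key: str, boundaries: list[str]) -> int:
    """Map a key to a partition using sorted split points.

    With boundaries [b0, b1, ...], partition 0 holds keys < b0,
    partition 1 holds b0 <= key < b1, and so on.
    """
    return bisect.bisect_right(boundaries, key)


if __name__ == "__main__":
    splits = ["g", "n", "t"]  # hypothetical split points, giving 4 range partitions
    for k in ["apple", "grape", "mango", "zucchini"]:
        print(k, "hash ->", hash_partition(k, 4), "range ->", range_partition(k, splits))
```

The two schemes trade off differently. Range partitioning keeps adjacent keys together, so range scans such as "all orders in March" touch few partitions, but popular ranges can become hotspots. Hash partitioning spreads keys evenly but scatters range queries across every partition.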

Benefits of Data Partitioning

- Enhanced Performance: By distributing data across multiple nodes or servers, data partitioning reduces the load on individual machines, resulting in faster query processing and data retrieval.

- Scalability: Data partitioning makes horizontal scaling straightforward: new nodes can be added and partitions rebalanced onto them, so capacity grows with data volume. A well-chosen partitioning scheme keeps the amount of data that must move during rebalancing small.

- Improved Fault Tolerance: Because partitions live on different nodes, a hardware failure or network issue affects only part of the dataset. Combined with replication, which keeps copies of each partition on several nodes, this reduces the risk of data loss or downtime.

Examples of Use Cases

- E-commerce Platforms: Online retailers often use data partitioning to manage large volumes of customer transactions, product data, and inventory information efficiently.

- Telecommunications Industry: Telecom companies utilize data partitioning to handle massive amounts of call records, network data, and customer information for improved performance and reliability.

- Healthcare Sector: Healthcare providers leverage data partitioning to store and analyze patient records, medical imaging data, and research findings securely while ensuring quick access to critical information.

In conclusion, Big Data storage architecture is multifaceted: it combines technologies and design strategies to meet evolving data management demands. Scalable, elastic storage systems let organizations get the full value from their data.

For companies looking for efficient data management, data lake solutions provide a comprehensive approach to storing and analyzing data. By consolidating information from multiple sources into a single repository, organizations can gain valuable insights and make informed decisions.

For Cassandra data management, businesses benefit from a robust, scalable database system that can handle large volumes of data. Its distributed architecture provides high availability and fault tolerance for critical applications.