Data processing frameworks let businesses work with massive datasets using distributed, fault-tolerant, and increasingly real-time technologies. This article surveys what these frameworks are, how batch and stream processing differ, and how parallelism and fault tolerance are built into their architecture.

Overview of Data Processing Frameworks

Data processing frameworks are software tools or platforms designed to efficiently handle and process large volumes of data. These frameworks provide a structured approach to organizing, manipulating, and analyzing datasets, making it easier for organizations to extract valuable insights from their data in a timely manner.

Importance of Data Processing Frameworks

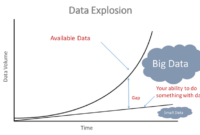

Data processing frameworks play a crucial role in handling large datasets efficiently by offering features such as parallel processing, fault tolerance, and scalability. They let organizations process massive amounts of data in a distributed computing environment, where work is spread across many machines and completes faster than it would on a single machine. By leveraging data processing frameworks, businesses can improve decision-making, enhance operational efficiency, and gain a competitive edge in today’s data-driven world.

Popular Data Processing Frameworks

- Apache Hadoop: Apache Hadoop is one of the most widely used data processing frameworks in the industry. It is an open-source framework that enables distributed processing of large datasets across clusters of computers using simple programming models, most notably MapReduce, with the Hadoop Distributed File System (HDFS) providing the underlying storage.

- Apache Spark: Apache Spark is another popular data processing framework known for its speed and ease of use. It provides in-memory data processing capabilities, making it ideal for iterative algorithms and interactive data analysis.

- Apache Flink: Apache Flink is a powerful stream processing framework that offers low latency and high throughput for real-time data processing. It supports event-driven applications and complex event processing, making it suitable for applications requiring real-time analytics.
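The "simple programming models" mentioned for Hadoop usually mean MapReduce. As a rough illustration of that model only, the following single-process Python sketch counts words in three phases. It does not use Hadoop's API, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical; in a real cluster the framework would run the map and reduce steps on different machines and perform the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as a framework would between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    """Run the three phases in order on an iterable of text documents."""
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    docs = ["spark and flink", "hadoop and spark"]
    print(word_count(docs))
```

Because each document is mapped independently and each key is reduced independently, both phases can be spread across many workers, which is what makes the model suitable for clusters.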

Batch vs. Stream Processing

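Batch processing and stream processing are the two main approaches to handling data in these frameworks. Batch processing works on bounded datasets that have been collected over time, while stream processing handles unbounded data record by record as it arrives.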

Advantages and Disadvantages of Batch Processing

Batch processing involves processing data in large volumes at scheduled intervals. Its main advantage is efficiency for large amounts of data that don’t require real-time processing: complex algorithms can run over entire datasets without the need for immediate results. The main disadvantage is latency, because results only become available after a batch of data has accumulated and the job has finished running.
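A minimal sketch of the batch idea, assuming records are dictionaries with a hypothetical `amount` field and that a "job" simply totals each batch. The helper names (`batches`, `process_batch`, `run_batch_job`) are illustrative and not taken from any framework.

```python
from itertools import islice


def batches(records, batch_size):
    """Yield successive lists of up to batch_size records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_batch(batch):
    """Hypothetical batch job: total the amounts in one batch."""
    return sum(record["amount"] for record in batch)


def run_batch_job(records, batch_size=3):
    """Process the accumulated records one full batch at a time."""
    return [process_batch(batch) for batch in batches(records, batch_size)]


if __name__ == "__main__":
    accumulated = [{"amount": n} for n in range(1, 8)]
    print(run_batch_job(accumulated, batch_size=3))
```

Nothing is computed for a record until its whole batch is available, which is the source of the latency described above.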

Real-time Capabilities of Stream Processing

Stream processing, on the other hand, involves processing data in real time as it arrives. This allows for immediate processing and analysis of data streams, enabling quick decision-making based on up-to-date information. Stream processing is ideal for applications that require real-time insights and actions, such as fraud detection, monitoring systems, and IoT devices. These real-time capabilities make data processing systems more responsive, enabling businesses to react swiftly to changing data patterns and trends.
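To make the contrast with batch processing concrete, here is a small sketch in the spirit of the fraud detection use case. It assumes each event is a dictionary with hypothetical `id` and `amount` fields, and it uses a deliberately simple rule (flag an amount larger than `threshold` times the running mean of earlier events) that is an illustration, not a real fraud model.

```python
def detect_large_transactions(events, threshold=3.0):
    """Yield an alert as soon as an event's amount exceeds
    threshold times the running mean of all earlier events."""
    count = 0
    total = 0.0
    for event in events:
        amount = event["amount"]
        # Decide immediately, using only state built from earlier events.
        if count > 0 and amount > threshold * (total / count):
            yield {"id": event["id"], "amount": amount}
        count += 1
        total += amount


if __name__ == "__main__":
    stream = iter([
        {"id": 1, "amount": 10.0},
        {"id": 2, "amount": 12.0},
        {"id": 3, "amount": 100.0},
        {"id": 4, "amount": 11.0},
    ])
    for alert in detect_large_transactions(stream):
        print("alert:", alert)
```

The generator keeps only a small running state and emits each alert before the next event is read, so it would work equally well on an endless source.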

Parallel Processing in Data Frameworks

Parallel processing within data frameworks involves breaking down tasks into smaller chunks and executing them simultaneously across multiple processors or cores. This approach allows for faster data processing, improved performance, and enhanced scalability.

Improved Performance and Scalability

- Parallel processing enhances performance by reducing the overall processing time. By dividing tasks among multiple processors, data can be processed more efficiently, leading to quicker results.

- Scalability is also improved as additional processors can be added to handle increased workloads without sacrificing performance. This flexibility allows data processing frameworks to adapt to changing demands seamlessly.
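The chunk-and-combine pattern described above can be sketched with Python's standard `concurrent.futures` module. This is an illustration under simplifying assumptions: the task (a sum of squares) and the helper names are hypothetical, and a thread pool is used only to keep the example self-contained. For CPU-bound work in CPython, a process pool or a cluster framework would be the more realistic choice.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, num_chunks):
    """Split data into at most num_chunks contiguous chunks of near-equal size."""
    chunk_size = max(1, -(-len(data) // num_chunks))  # ceiling division
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def sum_of_squares(chunk):
    """Work done independently on one chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Process each chunk on its own worker, then combine the partial results."""
    chunks = split_into_chunks(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10)), workers=4))
```

Raising `workers` increases the number of chunks processed at once without changing the result, which mirrors how adding processors lets a framework absorb a larger workload.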

Examples of Data Processing Frameworks

- Apache Spark: Apache Spark is a popular data processing framework that leverages parallel processing through its resilient distributed datasets (RDDs) and directed acyclic graph (DAG) execution engine. It allows for in-memory processing and supports parallel operations for tasks like map-reduce, machine learning, and graph processing.

- Apache Flink: Apache Flink is another framework known for its efficient stream processing capabilities. It offers support for parallel data processing through data streaming and batch processing modes. Flink’s distributed architecture enables parallel execution of tasks across multiple nodes, ensuring high performance and fault tolerance.

Data Processing Framework Architecture

Data processing frameworks typically consist of several key components that work together to ingest, process, and output data efficiently. These components play crucial roles in ensuring fault tolerance and reliability within the architecture.

Architecture Components of Data Processing Frameworks

- Data Ingestion: This component is responsible for collecting data from various sources and transferring it into the processing framework. It involves handling data from different formats and structures, ensuring smooth data flow.

- Data Processing: Once the data is ingested, this component processes the data according to the defined logic or algorithms. It involves tasks such as filtering, transforming, aggregating, and analyzing the data to derive meaningful insights.

- Data Output: After processing, the results are sent to the output component for storage, visualization, or further analysis. This component ensures that the processed data is delivered to the intended destination efficiently.
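The three components can be sketched as three small functions chained together. The example assumes a hypothetical CSV input with `region` and `amount` columns and writes JSON as its output format; real frameworks offer connectors for many more sources and sinks, and none of these function names come from a specific product.

```python
import csv
import io
import json


def ingest(csv_text):
    """Ingestion: parse raw CSV text into one dictionary per row."""
    return csv.DictReader(io.StringIO(csv_text))


def process(rows):
    """Processing: drop rows with no amount, then total amounts per region."""
    totals = {}
    for row in rows:
        if not row["amount"]:
            continue  # filter out incomplete records
        region = row["region"]
        totals[region] = totals.get(region, 0.0) + float(row["amount"])
    return totals


def output(totals):
    """Output: serialize results as JSON for a downstream store or dashboard."""
    return json.dumps(totals, sort_keys=True)


def run_pipeline(csv_text):
    """Chain the three stages: ingest, then process, then output."""
    return output(process(ingest(csv_text)))


if __name__ == "__main__":
    raw = "region,amount\neast,10.5\nwest,4\neast,2\nwest,\n"
    print(run_pipeline(raw))
```

Keeping the stages separate means a source or destination can be swapped without touching the processing logic.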

Role of Data Ingestion, Processing, and Output

- The data ingestion component ensures that data from various sources is collected and brought into the processing framework for further analysis.

- The data processing component executes the defined logic on the ingested data to derive insights and valuable information.

- The data output component delivers the processed data to the desired destination, such as a database, data warehouse, or visualization tool, for consumption.

Fault Tolerance and Reliability in Data Processing Framework Architecture

- One way fault tolerance is achieved is through data replication, where copies of data are stored on multiple nodes to ensure data availability in case of failures.

- Reliability is maintained through mechanisms like checkpointing, which saves the state of the processing job at regular intervals to recover from failures without reprocessing the entire dataset.

- Data processing frameworks also use task resubmission and job monitoring to handle failures and ensure the reliability of data processing tasks.
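Checkpointing in particular is easy to illustrate. The sketch below is a simplified, single-machine assumption of how it works: the job (summing a list) and the checkpoint format (a small JSON file) are hypothetical, and the `fail_at` parameter exists only to simulate a crash. Production frameworks coordinate checkpoints across many nodes and write them to durable storage.

```python
import json
import os
import tempfile


def process_with_checkpoints(records, checkpoint_path, interval=2, fail_at=None):
    """Sum records, saving progress every `interval` records.

    If a checkpoint file already exists, resume from the saved position
    instead of starting again from the first record.
    """
    state = {"next_index": 0, "total": 0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)

    for index in range(state["next_index"], len(records)):
        if fail_at is not None and index == fail_at:
            raise RuntimeError(f"simulated failure at record {index}")
        state["total"] += records[index]
        state["next_index"] = index + 1
        if state["next_index"] % interval == 0:
            with open(checkpoint_path, "w") as f:
                json.dump(state, f)  # persist the state reached so far
    return state["total"]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "job.ckpt")
    data = [1, 2, 3, 4, 5]
    try:
        process_with_checkpoints(data, path, interval=2, fail_at=3)
    except RuntimeError as error:
        print("job failed:", error)
    # Resubmitting the job resumes from the last checkpoint.
    print(process_with_checkpoints(data, path, interval=2))
```

After the simulated failure, the second run only repeats the work done since the last checkpoint, which is the saving the checkpointing bullet describes. Resubmitting the failed job is also a small-scale version of task resubmission.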

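In conclusion, data processing frameworks improve data management by combining parallel processing for performance, distributed execution for scalability, and mechanisms such as replication and checkpointing for fault tolerance. Choosing between batch and stream processing depends on how quickly results are needed.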

MongoDB storage offers a flexible and scalable solution for handling large volumes of data. With its document-oriented structure, MongoDB provides efficient storage and retrieval capabilities for modern applications.

For businesses looking for high availability data storage, solutions like clustering and replication can ensure continuous access to critical information. Implementing redundant systems can minimize downtime and enhance data reliability.

For data analytics, columnar databases are gaining popularity because they store data by column rather than by row. This design allows for faster query performance and better data compression for analytical workloads.