
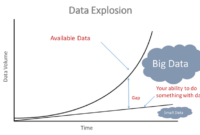

HBase is a storage framework designed for the storage needs of big data environments, and it is used to manage large datasets across a range of industries.

Overview of HBase Storage Solution

HBase is an open-source, distributed, non-relational database that provides real-time read/write access to large datasets. It is designed to handle massive amounts of data stored in a distributed environment, making it ideal for big data applications.

Utilization of HBase in Big Data Environments

In big data environments, HBase is utilized as a scalable, fault-tolerant storage solution that can handle petabytes of data across thousands of commodity servers. It is commonly used in conjunction with Apache Hadoop, as it integrates seamlessly with the Hadoop ecosystem, providing a reliable storage layer for Hadoop applications.

- Allows for fast data retrieval and processing

- Supports high availability and automatic sharding

- Provides linear and modular scalability

Benefits of Using HBase as a Storage Solution

HBase offers several key benefits when used as a storage solution in big data environments. These include:

- Scalability: HBase can scale to accommodate growing data volumes by adding servers, without compromising performance.

- High Availability: HBase is designed to keep data available when a server fails. Regions served by a failed Region Server are reassigned to healthy ones.

- Real-time Access: HBase serves reads and writes in real time, making it suitable for applications requiring low latency.

Industries and Use Cases for HBase Storage Solution

HBase is commonly employed in industries and use cases where real-time data processing and scalability are essential. Some examples include:

- Financial Services: HBase is used for fraud detection, risk analysis, and real-time transaction processing.

- Telecommunications: HBase is utilized for call detail record (CDR) analysis, network optimization, and customer churn prediction.

- Online Retail: HBase is employed for personalized product recommendations, inventory management, and customer behavior analysis.

Architecture of HBase Storage Solution

HBase is designed with a unique architecture that allows for scalable and distributed storage of large volumes of data. Let’s delve into the details of how HBase manages data storage and processing.

HBase Master and Region Servers

In the architecture of HBase, the HBase Master server acts as a coordinator that manages the cluster. It is responsible for assigning regions to Region Servers, monitoring their status, and handling load balancing. On the other hand, Region Servers are responsible for serving data to clients and executing read and write operations on the data stored in the regions.
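The Master's assignment role can be pictured with a toy balancer. This is a minimal sketch that only evens out region counts; HBase's real balancer weighs more factors, and the function name `assign_regions` and the server names are hypothetical.

```python
import heapq

def assign_regions(regions, servers):
    """Assign each region to the server that currently holds the fewest regions."""
    # Heap of (region_count, server_name) so the least-loaded server pops first.
    heap = [(0, s) for s in sorted(servers)]
    heapq.heapify(heap)
    assignment = {s: [] for s in servers}
    for region in regions:
        count, server = heapq.heappop(heap)
        assignment[server].append(region)
        heapq.heappush(heap, (count + 1, server))
    return assignment

plan = assign_regions([f"region-{i}" for i in range(7)], ["rs1", "rs2", "rs3"])
for server, owned in sorted(plan.items()):
    print(server, owned)
```

With seven regions and three servers, no server ends up more than one region ahead of another.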

Data Storage and Management

Data in HBase is stored in tables that are divided into regions, which are distributed across Region Servers. Each region corresponds to a contiguous range of row keys and is responsible for storing and managing the data within that range. HBase persists its files on a distributed file system, typically HDFS, whose block replication provides fault tolerance and high availability.
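Because each region owns a range of row keys, finding the region for a key is a search over sorted start keys. The sketch below illustrates the idea with plain strings (HBase row keys are byte arrays); the start keys, server names, and `locate_region` are made up for the example.

```python
import bisect

# Hypothetical layout: each region starts at a key and runs up to the next start key.
# The empty string marks the first region, which has no lower bound.
REGION_START_KEYS = ["", "g", "n", "t"]
REGION_SERVERS = ["rs1", "rs2", "rs3", "rs1"]

def locate_region(row_key):
    """Return (region_index, server) for the region whose key range contains row_key."""
    # bisect_right finds the first start key greater than row_key;
    # the region just before it owns the key (start inclusive, end exclusive).
    idx = bisect.bisect_right(REGION_START_KEYS, row_key) - 1
    return idx, REGION_SERVERS[idx]

print(locate_region("apple"))
print(locate_region("n"))
print(locate_region("zebra"))
```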

Scalability Features

One of the key features of HBase is its scalability. As data grows, HBase can scale horizontally by adding more Region Servers to the cluster. This allows HBase to handle massive amounts of data and high read and write throughput efficiently. Additionally, HBase supports automatic sharding: regions split as they grow, and the resulting regions are distributed across servers to keep workloads balanced.
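Automatic sharding can be illustrated with a toy split. This sketch assumes a region is just a sorted list of row keys and splits on row count; HBase itself decides based on store size, and `split_region` and `max_rows` are hypothetical names.

```python
def split_region(rows, max_rows):
    """Split a sorted list of row keys into two halves once it exceeds max_rows."""
    if len(rows) <= max_rows:
        return [rows]
    mid = len(rows) // 2
    # The middle key becomes the start key of the second daughter region.
    return [rows[:mid], rows[mid:]]

region = sorted(f"user{i:03d}" for i in range(10))
daughters = split_region(region, max_rows=8)
for d in daughters:
    print(d[0], "..", d[-1], f"({len(d)} rows)")
```

Each daughter still covers a contiguous key range, so either one can be moved to another server without affecting lookups in the other.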

Data Modeling in HBase

Data modeling in HBase involves structuring and organizing data in a way that is optimized for the distributed nature of the storage solution. Unlike traditional RDBMS systems, HBase follows a column-family-oriented (wide-column) data model that allows for flexible schema design and efficient retrieval by row key.

Column Families and Column Qualifiers

In HBase, data is organized into column families, which are groups of columns that are stored together on disk. Each column family can contain multiple column qualifiers, which further define the individual columns within the family. This hierarchical structure allows for efficient storage and retrieval of data based on column families and qualifiers.

- Column Families: Column families serve as a way to logically group related data together. For example, in a table storing information about users, you could have a column family for personal details and another for account information.

- Column Qualifiers: Column qualifiers are used to uniquely identify individual columns within a column family. For instance, within the personal details column family, you could have column qualifiers for name, age, and address.
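To make "stored together on disk" concrete, the sketch below groups cells by column family and sorts each group by row key, qualifier, and newest timestamp first, which mirrors how HBase orders cells within a family. The tuples and the `group_by_family` helper are illustrative, not an HBase API.

```python
from collections import defaultdict

# Each cell is (row_key, family, qualifier, timestamp, value). All names are illustrative.
cells = [
    ("user2", "personal", "name", 100, "Bo"),
    ("user1", "account", "plan", 100, "free"),
    ("user1", "personal", "name", 100, "Al"),
    ("user1", "personal", "name", 200, "Alan"),
    ("user1", "personal", "age", 100, "31"),
]

def group_by_family(cells):
    """Group cells per column family, sorted by row, qualifier, then newest timestamp first."""
    stores = defaultdict(list)
    for row, family, qualifier, ts, value in cells:
        stores[family].append((row, qualifier, ts, value))
    for family in stores:
        stores[family].sort(key=lambda c: (c[0], c[1], -c[2]))
    return dict(stores)

stores = group_by_family(cells)
for family, kvs in sorted(stores.items()):
    print(family, kvs)
```

A read that only needs the `account` family never has to touch the cells stored under `personal`.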

Data Structure in HBase Tables

In HBase tables, data is stored in rows that are uniquely identified by a row key, and rows are kept sorted by that key. Each row can hold cells in multiple column families, and each column family can have multiple column qualifiers. Column families are defined as part of the table schema, while qualifiers can be added dynamically on any write without affecting existing data, which keeps the schema flexible.

- Example: Consider a table storing information about products. Each row represents a unique product identified by a product ID. The columns could include details such as product name, price, and category, organized into different column families like basic info and pricing.
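The product example can be modeled in a few lines. This is a toy in-memory stand-in, not the HBase client API; the class `ToyTable`, the family names `basic_info` and `pricing`, and the product IDs are assumptions made for the illustration.

```python
class ToyTable:
    """In-memory stand-in for one HBase table; column families are fixed at creation."""

    def __init__(self, families):
        self.families = set(families)
        self.rows = {}  # row_key -> {(family, qualifier): value}

    def put(self, row_key, family, qualifier, value):
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        # Qualifiers need no prior declaration; any new one is accepted.
        self.rows.setdefault(row_key, {})[(family, qualifier)] = value

    def get(self, row_key, family=None):
        """Return the row's cells as {"family:qualifier": value}, optionally for one family."""
        row = self.rows.get(row_key, {})
        return {f"{f}:{q}": v for (f, q), v in row.items() if family in (None, f)}

products = ToyTable(families=["basic_info", "pricing"])
products.put("P1001", "basic_info", "name", "Kettle")
products.put("P1001", "basic_info", "category", "Kitchen")
products.put("P1001", "pricing", "price", "24.99")
products.put("P1002", "basic_info", "name", "Lamp")  # sparse row: no pricing cells yet

print(products.get("P1001"))
print(products.get("P1001", family="pricing"))
```

Row `P1002` simply has fewer cells than `P1001`; nothing is stored for the columns it lacks.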

Comparison with RDBMS Systems

When comparing data modeling in HBase with traditional RDBMS systems, one key difference is schema flexibility. In HBase, only the column families are declared up front; new column qualifiers can be written on the fly without altering existing data, making it easier to adapt to evolving data requirements. RDBMS systems, by contrast, have a fixed schema that is defined up front, so adding a column requires a schema change.

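Another difference is the storage and access mechanism. HBase stores cells grouped by column family and sorted by row key, so it is suited to fast lookups by row key, range scans over adjacent keys, and tables that are wide and sparse. It offers no SQL joins or secondary indexes out of the box, and atomicity is guaranteed per row. RDBMS systems store data in row-oriented tables with indexes and multi-row transactions, which makes them better suited to transactional workloads and ad hoc queries on arbitrary columns.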
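Whatever the storage layout, lookups in HBase go through the row key: either a point read or a scan over a sorted key range. The sketch below mimics a bounded range scan over sorted string keys; the key format and the `scan` function are hypothetical.

```python
import bisect

# Row keys are kept in sorted order; the values here are placeholder payloads.
ROWS = {
    "order#2023-01-05#17": "a",
    "order#2023-01-09#02": "b",
    "order#2023-02-01#44": "c",
    "user#42": "d",
}
SORTED_KEYS = sorted(ROWS)

def scan(start_key, stop_key):
    """Yield (key, value) for start_key <= key < stop_key, like a bounded range scan."""
    lo = bisect.bisect_left(SORTED_KEYS, start_key)
    hi = bisect.bisect_left(SORTED_KEYS, stop_key)
    for key in SORTED_KEYS[lo:hi]:
        yield key, ROWS[key]

january = list(scan("order#2023-01", "order#2023-02"))
print(january)
```

This is why row key design matters so much: a query is cheap when the rows it needs sit next to each other in key order.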

Performance and Optimization in HBase Storage

Performance optimization plays a crucial role in ensuring that HBase storage operates efficiently and effectively. By implementing various strategies and understanding key concepts such as data locality and region splits, organizations can enhance the overall performance of their HBase storage solution.

Importance of Data Locality and Region Splits

Data locality means keeping data physically close to where it is processed, which reduces network traffic and latency. In HBase, a region has good locality when the HDFS blocks of its files are stored on the same machine as the Region Server that serves it, so reads come from local disk instead of the network. Locality builds up naturally because a Region Server writes new files to its local DataNode first, and it can drop when a region moves to another server until compactions rewrite the files locally. Region splits address a different concern: they divide a growing table into smaller regions that can be distributed across multiple nodes. Because each region covers a contiguous range of row keys, rows with nearby keys are stored and served together.
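One way to quantify locality is the fraction of a region's file blocks that have a replica on the same host as the Region Server serving it. The sketch below computes that ratio under the simplifying assumption that all blocks are the same size; the host names and `locality_index` are hypothetical.

```python
def locality_index(block_hosts, region_server_host):
    """Fraction of blocks with at least one replica on the Region Server's own host."""
    if not block_hosts:
        return 1.0  # assumption: treat a region with no data as fully local
    local = sum(1 for hosts in block_hosts if region_server_host in hosts)
    return local / len(block_hosts)

# Hypothetical replica placement for the four blocks of one region's files.
blocks = [
    {"node1", "node2", "node3"},
    {"node1", "node4", "node5"},
    {"node2", "node3", "node4"},
    {"node1", "node3", "node5"},
]
print(locality_index(blocks, "node1"))
print(locality_index(blocks, "node6"))
```

A value near 1.0 means most reads can be served from local disk, while a value near 0.0 means nearly every read crosses the network.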

Impact of Compactions and Caching Mechanisms

Compactions in HBase merge smaller HFiles into larger ones, reducing the number of files read during data retrieval and improving read performance. Compactions run automatically, and their timing and thresholds can be tuned so that the extra I/O they generate does not compete with peak traffic. The BlockCache reduces read latency by keeping frequently accessed HFile blocks in memory, reducing the need to fetch data from disk. The MemStore is primarily a write buffer: it holds recent writes in memory until they are flushed to a new HFile, and reads also consult it so that the latest data is visible.
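The core of a compaction is a merge of several sorted files into one. The sketch below is a simplified model that keeps only the newest value per row; real compactions also deal with multiple versions, delete markers, and expiry, and the tuple layout and `compact` function are assumptions for the example.

```python
import heapq

def compact(*hfiles):
    """Merge sorted files of (row_key, timestamp, value), keeping the newest value per row."""
    # heapq.merge streams the already-sorted inputs in (row_key, newest-first) order.
    merged = heapq.merge(*hfiles, key=lambda kv: (kv[0], -kv[1]))
    result, last_row = [], None
    for row_key, ts, value in merged:
        if row_key != last_row:  # first entry seen for a row is its newest version
            result.append((row_key, ts, value))
            last_row = row_key
    return result

older = [("row1", 100, "a"), ("row3", 100, "c")]
newer = [("row1", 200, "A"), ("row2", 150, "b")]
print(compact(older, newer))
```

After the merge, a read for `row1` touches one file instead of two, which is where the read-performance benefit comes from.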

Best Practices for Tuning HBase Storage Solution

- Monitor and adjust region size: Sizing regions to match data access patterns and workload requirements helps spread load evenly across Region Servers and reduces the likelihood of hotspots.

- Enable compression: Use a compression codec such as Snappy or LZO to reduce storage space and disk I/O, at the cost of some CPU.

- Tune compaction and flush settings: Adjust compaction and flush settings based on workload characteristics to achieve optimal performance and resource utilization.

- Utilize Bloom filters: Enable Bloom filters so that reads can skip store files that cannot contain the requested row, which reduces disk reads and improves query performance.

- Monitor and optimize JVM settings: Regularly monitor and adjust Java Virtual Machine (JVM) settings to ensure efficient memory utilization and garbage collection.
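To show why Bloom filters cut disk reads, here is a small self-contained Bloom filter used to decide which store files are worth opening for a given row. It is a sketch of the general technique, not HBase's implementation; the class, the file names, and the sizing parameters are all hypothetical.

```python
import hashlib

class BloomFilter:
    """Small Bloom filter: may report false positives, never false negatives."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits)  # one byte per bit, for readability

    def _positions(self, key):
        # Derive num_hashes positions by hashing the key with different prefixes.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key):
        return all(self.bits[pos] for pos in self._positions(key))

# One filter per (hypothetical) store file, built from the row keys it holds.
files = {"hfile-a": ["row1", "row2"], "hfile-b": ["row7", "row9"]}
filters = {}
for name, keys in files.items():
    bf = BloomFilter()
    for k in keys:
        bf.add(k)
    filters[name] = bf

candidates = [name for name, bf in filters.items() if bf.might_contain("row7")]
print(candidates)
```

Any file whose filter answers "no" can be skipped without touching disk. A "yes" still requires reading the file, because the filter can occasionally be wrong in that direction.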

By implementing these best practices and understanding the importance of data locality, region splits, compactions, and caching mechanisms, organizations can optimize their HBase storage solution for better efficiency and performance.
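One common way to avoid the hotspots mentioned above is to salt sequential row keys, so that consecutive writes land in different key ranges instead of piling onto one region. The sketch below assumes a fixed number of buckets and a hash-derived prefix; `NUM_BUCKETS` and `salted_key` are illustrative names.

```python
import hashlib

NUM_BUCKETS = 4  # assumption: roughly one bucket per region that should share the writes

def salted_key(row_key):
    """Prefix the key with a stable bucket number derived from a hash of the key."""
    digest = hashlib.sha256(row_key.encode()).digest()
    bucket = digest[0] % NUM_BUCKETS
    return f"{bucket:02d}-{row_key}"

# Sequential keys such as timestamps would otherwise all land in the same region.
keys = [f"2024-01-01T00:00:{s:02d}" for s in range(20)]
salted = [salted_key(k) for k in keys]
buckets = sorted({k.split("-", 1)[0] for k in salted})
print(buckets)
```

The trade-off is that a range scan over the original key order now has to query every bucket and merge the results, so salting fits write-heavy tables better than scan-heavy ones.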

In conclusion, HBase gives organizations an efficient, scalable option for storing large datasets that need low-latency reads and writes. The concepts covered here, including regions, column families, compactions, and caching, are a practical starting point for evaluating it for your own workloads.
