High availability data storage keeps critical information accessible even when individual components fail. This article explains what it is, which types of systems provide it, which factors shape its design, and how to implement it well.

Approaches range from RAID to clustering and replication, and each suits different needs.

What is High Availability Data Storage?


High availability data storage refers to a system or solution designed to keep data accessible and operational even in the event of hardware failures, natural disasters, or other disruptions. The goal is for users to reach their data with little or no downtime, which makes it a crucial component of modern IT infrastructures.
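Availability is often stated as a percentage of uptime, and it helps to translate that percentage into the downtime it still permits. The sketch below does that arithmetic for a non-leap year. The function name `downtime_minutes_per_year` and the sample targets are illustrative assumptions, not figures from any particular vendor or standard.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the yearly downtime that an availability target still allows."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


# Illustrative targets only; pick the one your own requirements call for.
for target in (99.0, 99.9, 99.99, 99.999):
    minutes = downtime_minutes_per_year(target)
    print(f"{target}% -> {minutes:.1f} minutes/year")
```

Each additional "nine" cuts the permitted downtime by a factor of ten, which is why higher targets tend to cost considerably more to achieve.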

Importance of High Availability Data Storage

High availability data storage is essential for businesses and organizations that rely heavily on continuous access to their data. Industries such as finance, healthcare, e-commerce, and telecommunications require high availability data storage solutions to ensure that critical information is always accessible and protected.

Examples of Industries Relying on High Availability Data Storage

- Finance: Banks and financial institutions need high availability data storage to ensure continuous access to customer information, transactions, and sensitive financial data.

- Healthcare: Hospitals and healthcare providers rely on high availability data storage to access patient records, medical imaging, and other critical data in real-time.

- E-commerce: Online retailers need high availability data storage to support their websites, manage inventory, process orders, and ensure a seamless shopping experience for customers.

- Telecommunications: Telecom companies require high availability data storage to manage network operations, customer data, and communication services without interruptions.

Types of High Availability Data Storage Systems

High availability data storage systems come in various types, each offering unique features and benefits to ensure data reliability and accessibility. Let’s explore some of the most common types:

RAID (Redundant Array of Independent Disks)

RAID is a data storage technology that combines multiple disk drives into a single logical unit to improve performance, redundancy, or both. Common levels include RAID 0 (striping, which improves performance but provides no redundancy), RAID 1 (mirroring), RAID 5 (striping with distributed parity), and RAID 10 (striping across mirrored pairs), each with different advantages and disadvantages. RAID systems are suitable for businesses that require a balance between performance, redundancy, and cost-effectiveness.
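To make the trade-offs between levels concrete, here is a minimal sketch that computes usable capacity and the number of disk failures each level is guaranteed to survive. The function `raid_summary` is a hypothetical helper written for this article. It assumes equal-sized disks, treats RAID 1 as one mirror set across all disks, and treats RAID 10 as two-way mirrors.

```python
def raid_summary(level: int, disks: int, disk_tb: float) -> tuple:
    """Return (usable capacity in TB, disk failures guaranteed to be survivable)."""
    if level == 0:
        if disks < 2:
            raise ValueError("RAID 0 needs at least 2 disks")
        return disks * disk_tb, 0          # striping only, no redundancy
    if level == 1:
        if disks < 2:
            raise ValueError("RAID 1 needs at least 2 disks")
        return disk_tb, disks - 1          # every disk holds a full copy
    if level == 5:
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb, 1    # one disk's worth of parity
    if level == 10:
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, 4 or more")
        # One failure is always survivable; more only if they hit different pairs.
        return (disks // 2) * disk_tb, 1
    raise ValueError(f"unsupported RAID level: {level}")


for level in (0, 1, 5, 10):
    capacity, failures = raid_summary(level, disks=4, disk_tb=2.0)
    print(f"RAID {level}: {capacity} TB usable, survives {failures} disk failure(s)")
```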

Clustering

Clustering involves connecting multiple servers together to work as a single system, providing high availability and load balancing. In a clustered environment, if one server fails, another server can take over seamlessly to ensure continuous access to data. Clustering is commonly used in large-scale enterprises and data centers where downtime is not an option.
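The failover behavior described above can be sketched with a heartbeat check: each node reports in periodically, and the first node in priority order that has reported recently is treated as active. The `Node` and `Cluster` classes, the node names, and the five-second timeout are all assumptions made for illustration. Production cluster managers also need quorum and fencing to avoid two nodes believing they are active at once, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    last_heartbeat: float  # timestamp in seconds of the last heartbeat received


@dataclass
class Cluster:
    nodes: List[Node]              # ordered by failover priority
    heartbeat_timeout: float = 5.0

    def is_healthy(self, node: Node, now: float) -> bool:
        return now - node.last_heartbeat <= self.heartbeat_timeout

    def active_node(self, now: float) -> Optional[Node]:
        """Return the highest-priority healthy node, or None if all are down."""
        for node in self.nodes:
            if self.is_healthy(node, now):
                return node
        return None


cluster = Cluster([Node("storage-a", 100.0), Node("storage-b", 100.0)])
print(cluster.active_node(now=102.0).name)  # storage-a is within the timeout

cluster.nodes[1].last_heartbeat = 109.0     # only storage-b keeps reporting
print(cluster.active_node(now=110.0).name)  # storage-a timed out, storage-b takes over
```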

Replication

Replication involves creating and maintaining copies of data across multiple storage devices or locations. By replicating data, organizations can ensure data availability in the event of hardware failures or disasters. Replication is commonly used in disaster recovery scenarios and for ensuring data consistency across different geographical locations.

Each type of high availability data storage system has its own set of advantages and disadvantages. RAID provides a cost-effective solution with improved performance and redundancy, but it only protects against disk failures within a single system, so it does not offer the same level of fault tolerance as clustering or replication. Clustering offers seamless failover capabilities but can be complex to set up and maintain. Replication ensures data availability and disaster recovery but may require significant storage resources and bandwidth.

The choice of high availability data storage system therefore depends on the specific needs and priorities of the organization. For instance, a financial institution may opt for clustering to ensure uninterrupted access to critical data, while a small business may find RAID more cost-effective for basic redundancy needs.

Factors Influencing High Availability Data Storage Design

The design of a high availability data storage system is shaped by several key factors that affect its reliability and performance: scalability, redundancy, fault tolerance, data replication, and disaster recovery strategies.

Scalability

Scalability is a critical factor in high availability data storage design as it determines the system’s ability to handle an increasing amount of data and user requests without compromising performance. By incorporating scalable architecture, organizations can easily expand their storage capacity as needed, ensuring that the system remains highly available even as data volumes grow. Scalability also allows for seamless integration of new hardware or software components to meet changing business requirements.

Redundancy

Redundancy is another key factor that influences the design of high availability data storage systems. By implementing redundant components such as storage drives, power supplies, and network connections, organizations can minimize the risk of system failures and ensure continuous data availability. Redundancy helps in maintaining system uptime by providing backup resources that can quickly take over in case of a hardware or software failure. Additionally, systems with redundancy at several layers can withstand more than one failure at a time, enhancing overall reliability.
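The benefit of redundant components can be estimated with a simple probability calculation. If a single component is available a fraction a of the time and n copies fail independently, the chance that at least one copy is up is 1 - (1 - a)^n. The sketch below applies that formula; the function name `combined_availability` is hypothetical, and the independence assumption is a strong one, since shared power, firmware, or location can cause copies to fail together.

```python
def combined_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` redundant components, assuming independent failures."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one component")
    # The system is down only when every copy is down at the same time.
    return 1 - (1 - component_availability) ** copies


for copies in (1, 2, 3):
    result = combined_availability(0.99, copies)
    print(f"{copies} component(s) at 99% each -> {result:.6f}")
```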

Fault Tolerance

Fault tolerance is a crucial aspect of high availability data storage design, as it determines the system’s ability to continue operating in the event of hardware or software failures. By incorporating fault-tolerant mechanisms such as RAID (Redundant Array of Independent Disks) and mirroring, organizations can ensure that data remains accessible even if one or more components fail. Fault-tolerant systems are designed to detect and recover from errors automatically, minimizing downtime and ensuring continuous data availability.
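Parity-based RAID levels recover from a lost disk by using XOR: the parity block is the XOR of the data blocks, so any single missing block equals the XOR of everything that survives. The following is a minimal sketch of that idea on in-memory byte strings, not a model of how a real controller lays out stripes. The helper name `xor_blocks` and the sample data are illustrative.

```python
from functools import reduce


def xor_blocks(blocks: list) -> bytes:
    """XOR equal-length byte blocks together, position by position."""
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data = [b"AAAA", b"BBBB", b"CCCC"]  # three data blocks on three disks
parity = xor_blocks(data)           # stored on a fourth disk

# Simulate losing the second disk, then rebuild it from the survivors plus parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])
```

The same calculation cannot recover two missing blocks at once, which is why single-parity schemes tolerate only one disk failure.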

Data Replication

Data replication is another factor that influences high availability data storage design by ensuring that data is duplicated across multiple storage devices or locations. By replicating data in real-time or near-real-time, organizations can mitigate the risk of data loss and ensure high availability in case of hardware failures or disasters. Data replication strategies such as synchronous and asynchronous replication play a crucial role in maintaining data consistency and availability across distributed storage systems.
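The difference between the two replication modes comes down to when a write is acknowledged. With synchronous replication the write is confirmed only after the replica has it; with asynchronous replication it is confirmed immediately and the replica catches up later, which is faster but can lose recent writes if the primary fails first. The toy class below illustrates this with in-memory dictionaries. `ReplicatedStore` and its methods are hypothetical names, and a real system would send writes over a network.

```python
from collections import deque


class ReplicatedStore:
    """Toy primary/replica pair held in memory, for illustration only."""

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.primary = {}
        self.replica = {}
        self.pending = deque()  # writes not yet applied to the replica

    def write(self, key: str, value: str) -> None:
        self.primary[key] = value
        if self.synchronous:
            # Acknowledge only after the replica also holds the write.
            self.replica[key] = value
        else:
            # Acknowledge immediately; the replica lags until the queue is shipped.
            self.pending.append((key, value))

    def ship_pending(self) -> None:
        """Apply queued writes to the replica in their original order."""
        while self.pending:
            key, value = self.pending.popleft()
            self.replica[key] = value


store = ReplicatedStore(synchronous=False)
store.write("order-1", "paid")
print("order-1" in store.replica)  # the replica has not caught up yet
store.ship_pending()
print("order-1" in store.replica)  # the replica now holds the write
```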

Disaster Recovery Strategies

Incorporating robust disaster recovery strategies is essential in high availability data storage design to ensure business continuity in the face of catastrophic events such as natural disasters, cyberattacks, or system failures. By implementing offsite backups, data archiving, and failover mechanisms, organizations can recover critical data and services quickly in the event of a disaster. Disaster recovery strategies help in minimizing data loss and downtime, ensuring that the system remains highly available even in challenging circumstances.

Best Practices for Implementing High Availability Data Storage

Implementing high availability data storage systems requires careful planning and execution to ensure seamless operations and data accessibility. Let’s explore some best practices for setting up and configuring these systems, the role of data backup and disaster recovery strategies, and tips for monitoring and maintaining their performance.

Setting Up High Availability Data Storage Systems

- Utilize redundant hardware components to minimize single points of failure.

- Implement clustering and load balancing techniques to distribute workload efficiently.

- Configure automatic failover mechanisms to ensure continuous operation in case of hardware or software failures.

- Regularly test failover procedures to validate system resilience.

Role of Data Backup and Disaster Recovery

- Implement regular backup schedules to ensure data integrity and availability.

- Utilize offsite backups to protect against site-wide disasters.

- Create disaster recovery plans outlining procedures for data restoration in case of catastrophic events.

- Test backup and recovery processes periodically to validate their effectiveness.
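One simple way to check a backup is to compare a checksum of the copy against the original. The sketch below copies a file and compares SHA-256 digests using only the Python standard library. The function names and the `records.db` file are placeholders. A matching checksum shows the copy is intact, but it does not replace a periodic full restore test.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> bool:
    """Copy `source` into `backup_dir` and confirm the copy matches the original."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)
    return sha256_of(source) == sha256_of(target)


# Demonstration in a temporary directory, with a placeholder file.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "records.db"
    source.write_bytes(b"example payload")
    print(backup_and_verify(source, Path(tmp) / "backup"))
```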

Monitoring and Maintaining Performance

- Utilize monitoring tools to track system performance and detect anomalies.

- Set up alerts for critical thresholds to proactively address potential issues.

- Regularly review system logs to identify trends and areas for optimization.

- Perform regular maintenance tasks such as software updates and hardware checks to ensure optimal performance.
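Threshold-based alerting can be sketched as a comparison between the latest metric readings and a table of limits. The metric names and limit values below are invented for illustration and should be replaced with whatever your monitoring tool actually reports. A metric that is missing entirely is also flagged, since silence from a storage node is often a problem in itself.

```python
# Hypothetical limits; tune these to your own system.
THRESHOLDS = {
    "disk_used_percent": 85.0,
    "replication_lag_seconds": 30.0,
    "read_latency_ms": 50.0,
}


def check_thresholds(metrics: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return one alert message per metric that is missing or over its limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no data")
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds {limit}")
    return alerts


sample = {
    "disk_used_percent": 91.0,
    "replication_lag_seconds": 4.0,
    "read_latency_ms": 12.0,
}
for alert in check_thresholds(sample):
    print(alert)
```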

In conclusion, high availability data storage systems are the backbone of modern IT operations, providing reliability and efficiency in an ever-evolving digital environment.

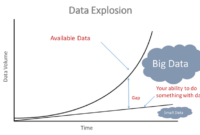
