Parallel data processing speeds up data handling by spreading work across multiple processors, and it is used in a wide range of industries. This overview covers the fundamental concepts and then moves on to practical applications.

Explore the different types of parallel data processing systems, architectures, algorithms, and frameworks that drive innovation and enhance performance in data processing tasks.

What is Parallel Data Processing?

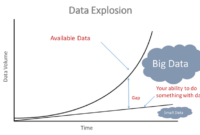

Parallel data processing refers to a method of computing where large amounts of data are processed simultaneously by multiple processors. Unlike serial processing, which handles data one piece at a time, parallel processing divides the data into smaller chunks and processes them concurrently. This approach significantly speeds up data processing tasks and enhances overall efficiency.
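
The chunk-and-combine idea described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pattern: the function names (`split_into_chunks`, `sum_of_squares`, `parallel_sum_of_squares`) and the choice of workload are assumptions made for the example. It uses threads from the standard library for portability; for CPU-bound work in CPython, `ProcessPoolExecutor` offers the same `map` interface and runs on separate cores.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split a list into at most n_chunks contiguous, roughly equal pieces."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The per-chunk work: independent of every other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    """Process the chunks concurrently, then combine the partial results."""
    chunks = split_into_chunks(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


data = list(range(1_000))
serial_result = sum(x * x for x in data)           # one piece at a time
parallel_result = parallel_sum_of_squares(data)    # chunked and concurrent
print(serial_result == parallel_result)            # prints True
```

The serial and parallel versions produce the same answer; only the way the work is divided differs.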

Advantages of Parallel Data Processing

- Increased Speed: By distributing the workload among multiple processors, parallel data processing can complete tasks much faster than serial processing.

- Scalability: Parallel processing systems can scale by adding more processors, which helps them handle growing volumes of data. The gain depends on how much of the workload can actually run in parallel.

- Fault Tolerance: In systems designed with redundancy, the work of a failed processor can be reassigned to the remaining processors, so data processing can continue after a failure.

Examples of Industries/Applications

- Big Data Analytics: Industries like finance, healthcare, and e-commerce use parallel data processing to analyze vast amounts of data in real time for insights and decision-making.

- Scientific Research: Parallel processing is vital in fields like weather forecasting, genomics, and particle physics where complex calculations require massive data processing capabilities.

- Computer Graphics: Rendering high-quality graphics and animations in industries such as entertainment and gaming relies heavily on parallel processing to keep render times short.

Types of Parallel Data Processing Systems

Parallel data processing systems can be categorized into two main types: shared memory systems and distributed memory systems. Each type comes with its own set of advantages and disadvantages, making them suitable for different use cases.

Shared Memory Systems

Shared memory systems involve multiple processors accessing a common memory space. This allows processors to share data easily and communicate efficiently. One of the key advantages of shared memory systems is their simplicity in programming, as all processors can access the same memory locations directly. However, one of the main disadvantages is the potential for contention and bottlenecks when multiple processors try to access the memory simultaneously.
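
Both sides of this trade-off show up in a small sketch. Here several threads update one shared variable directly, which is the programming convenience, and a lock serializes the updates, which is where contention comes from. The function name `increment_shared_counter` and its parameters are hypothetical, chosen for illustration.

```python
import threading


def increment_shared_counter(n_threads=4, increments_per_thread=10_000):
    """Have several threads update one shared variable, guarded by a lock."""
    state = {"counter": 0}  # the shared memory location
    lock = threading.Lock()

    def worker():
        for _ in range(increments_per_thread):
            with lock:  # only one thread may update at a time
                state["counter"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]


print(increment_shared_counter(4, 10_000))  # prints 40000
```

Every thread has to wait its turn at the lock, so adding threads to this particular workload adds waiting rather than throughput. That is the bottleneck described above in miniature.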

Distributed Memory Systems

In contrast, distributed memory systems consist of multiple processors with their own local memory. Processors communicate with each other through message passing, making them more scalable and flexible. Distributed memory systems are well-suited for handling large amounts of data and can easily expand by adding more nodes. However, programming for distributed memory systems can be more complex compared to shared memory systems due to the need for explicit message passing.
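
The explicit send and receive steps can be modeled as follows. This is only a simulation under stated assumptions: threads and in-process queues stand in for separate machines and a network, whereas real distributed memory systems typically use a message passing library such as MPI. The names `node` and `distributed_sum` are made up for the example. The point is that each node works only on data it received as a message and reports back only by sending a message.

```python
import queue
import threading


def node(inbox, outbox):
    """A 'node' with private state: it only sees what arrives in its inbox."""
    local_data = inbox.get()      # receive a message
    outbox.put(sum(local_data))   # send the partial result back


def distributed_sum(data, n_nodes=3):
    """Scatter chunks to nodes as messages, then gather their partial sums."""
    results = queue.Queue()
    inboxes = [queue.Queue() for _ in range(n_nodes)]
    workers = [threading.Thread(target=node, args=(inbox, results))
               for inbox in inboxes]
    for w in workers:
        w.start()
    for i, inbox in enumerate(inboxes):
        inbox.put(data[i::n_nodes])  # explicit send: node i's share of the data
    total = sum(results.get() for _ in range(n_nodes))  # explicit receive
    for w in workers:
        w.join()
    return total


print(distributed_sum(list(range(10)), n_nodes=3))  # prints 45
```

Compared with the shared memory style, the coordinator has to decide what to send to whom and when to collect results, which is the extra programming effort mentioned above.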

Parallel Data Processing Architectures

Parallel data processing architectures play a crucial role in optimizing data processing tasks by utilizing multiple processing units simultaneously. Let’s explore some of the common architectures used in parallel data processing.

SIMD (Single Instruction, Multiple Data) Architecture

SIMD architecture is designed to execute the same instruction across multiple data points simultaneously. This architecture is well-suited for tasks that involve processing large amounts of data in parallel. By applying the same operation to multiple data elements at once, SIMD architecture can significantly improve processing speed and efficiency.

- Each processing unit in a SIMD architecture receives the same instruction but operates on different data elements.

- Common applications of SIMD architecture include multimedia processing, image processing, and scientific computing.

- Vector processors and GPUs are examples of hardware that often utilize SIMD architecture to accelerate data processing tasks.

MIMD (Multiple Instruction, Multiple Data) Architecture vs. SIMD

MIMD architecture, on the other hand, allows multiple processing units to execute different instructions on different data sets independently. Unlike SIMD, where the same instruction is applied to multiple data elements, MIMD architecture provides more flexibility in handling diverse tasks and data sets simultaneously.

- In MIMD architecture, each processing unit can execute a unique instruction on its own set of data, enabling parallel processing of diverse tasks.

- This architecture is suitable for applications that require different processing steps to be carried out independently and asynchronously.

- Distributed computing systems and clusters often employ MIMD architecture to handle complex and varied computational tasks effectively.
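
The difference between the two models can be illustrated with a conceptual sketch. It models the semantics only: real SIMD is carried out by hardware vector instructions, and the Python list comprehension below runs sequentially. The function names `simd_style` and `mimd_style` are assumptions for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def simd_style(instruction, data):
    """One instruction, many data elements: every 'lane' applies the same op."""
    return [instruction(x) for x in data]


def mimd_style(tasks):
    """Many instructions, many data sets: each unit runs its own (func, data) pair."""
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(func, data) for func, data in tasks]
        return [f.result() for f in futures]


# SIMD: the same doubling operation applied to every element.
print(simd_style(lambda x: x * 2, [1, 2, 3]))  # prints [2, 4, 6]

# MIMD: three different operations on three different data sets.
print(mimd_style([(sum, [1, 2, 3]), (max, [4, 9, 2]), (sorted, [3, 1, 2])]))
# prints [6, 9, [1, 2, 3]]
```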

Parallel Data Processing Algorithms

Parallel data processing algorithms play a crucial role in improving the efficiency and performance of data processing systems by dividing tasks into smaller sub-tasks that can be processed simultaneously. Let’s explore some examples of algorithms used in parallel data processing, how they enhance efficiency, and the challenges associated with designing them.

Examples of Parallel Data Processing Algorithms

- MapReduce: This programming model is commonly used for processing large datasets in parallel. The input is split into smaller chunks, each chunk is processed independently (the map step), and the partial results are then aggregated (the reduce step).

- Parallel Sort: Algorithms like parallel merge sort or quicksort divide the sorting process into parallel tasks, significantly reducing the time required to sort large datasets.

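- K-means Clustering: In parallel versions of this algorithm, the step that assigns each data point to its nearest cluster center is split across processors, because each point can be assigned independently. This makes clustering large datasets faster.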
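
The MapReduce pattern from the list above can be sketched with a word count, the customary introductory example. This is a single-machine illustration under assumptions, not how Hadoop or any other framework implements it: the names `map_phase`, `reduce_phase`, and `word_count` are hypothetical, and a thread pool stands in for a cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_phase(chunk):
    """Map: count the words in one chunk of lines, independently of other chunks."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def reduce_phase(left, right):
    """Reduce: merge two partial counts into one."""
    return left + right


def word_count(lines, n_chunks=4):
    """Split the input, map each chunk concurrently, then reduce the partials."""
    chunks = [lines[i::n_chunks] for i in range(n_chunks)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partials = list(pool.map(map_phase, chunks))
    return reduce(reduce_phase, partials, Counter())


result = word_count(["a b a", "b c", "A"])
print(result["a"], result["b"], result["c"])  # prints 3 2 1
```

Because merging counts is associative, the partial results can be combined in any order, which is what lets the map step run without coordination.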

Efficiency and Performance Improvement

Parallel algorithms improve efficiency and performance by distributing the workload across multiple processing units, allowing tasks to be completed faster than with sequential processing. By leveraging parallelism, data processing systems can handle large volumes of data more effectively and reduce processing time significantly.

Challenges in Designing Parallel Algorithms

- Communication Overhead: Coordinating tasks and sharing data between parallel processing units can introduce communication overhead, impacting overall performance.

- Data Dependency: Managing dependencies between tasks and ensuring proper synchronization can be challenging in parallel algorithms, leading to potential bottlenecks.

- Scalability: Designing algorithms that scale efficiently with an increasing number of processing units is crucial but can be complex and require careful consideration.
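
One way to see why scalability is hard is Amdahl's law, which is not part of the discussion above but is a standard way to reason about it. It bounds the speedup by the fraction of the work that must stay serial, for example because of data dependencies. The sketch below assumes an idealized model with no communication overhead, so real systems will do worse; the function name `amdahl_speedup` is chosen for illustration.

```python
def amdahl_speedup(parallel_fraction, n_processors):
    """Upper bound on speedup when only part of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


# With 90% of the work parallelizable, more processors give diminishing returns.
for n in (1, 10, 100, 1000):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallelizable, the speedup approaches but never reaches 10x, no matter how many processing units are added.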

Parallel Data Processing Frameworks

Apache Hadoop, Spark, and Flink are among the most popular frameworks for parallel data processing. These frameworks offer various features and capabilities that make them suitable for processing large-scale data efficiently.

Apache Hadoop

Apache Hadoop is a well-known open-source framework that allows for the distributed processing of large data sets across clusters of computers. It is based on the MapReduce programming model and is designed for batch processing of big data. Hadoop also includes the Hadoop Distributed File System (HDFS) for storage and YARN for resource management.

Apache Spark

Apache Spark is another widely used framework. It provides in-memory data processing, which can make it faster than Hadoop MapReduce for workloads that reuse the same data, such as iterative algorithms. Spark supports several programming languages, including Java, Scala, Python, and R, and offers libraries for machine learning, graph processing, and stream processing. It also provides resilient distributed datasets (RDDs) for fault-tolerant parallel processing.

Apache Flink

Apache Flink is a framework for both real-time stream processing and batch processing. It supports event time processing, exactly-once semantics, and stateful computations, which suits it to complex data processing tasks. Flink is designed for high throughput and low latency, so it is a common choice for applications that require real-time data processing.
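
To make event time processing and stateful computation concrete, here is a conceptual sketch in plain Python. It is not Flink's API and it leaves out what Flink adds on top, such as handling late events and recovering state after failures. The function name `tumbling_window_counts` and the `(event_time_seconds, key)` event format are assumptions for the example.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping event-time windows.

    `events` is an iterable of (event_time_seconds, key) pairs in any
    arrival order. Each event is assigned to a window by its own timestamp,
    not by when it happens to be processed.
    """
    state = defaultdict(int)  # keyed state: (window_start, key) -> count
    for event_time, key in events:
        window_start = (event_time // window_seconds) * window_seconds
        state[(window_start, key)] += 1
    return dict(state)


# Events arrive out of order; the event at t=9 still lands in the first window.
events = [(1, "a"), (12, "a"), (3, "b"), (9, "a")]
print(tumbling_window_counts(events, window_seconds=10))
# prints {(0, 'a'): 2, (10, 'a'): 1, (0, 'b'): 1}
```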

These frameworks play a crucial role in facilitating large-scale data processing tasks by providing scalable, fault-tolerant, and efficient processing capabilities. Organizations can choose the framework that best suits their specific requirements based on factors such as data volume, processing speed, and complexity of computations.

In conclusion, parallel data processing is central to handling data at scale. Its systems, architectures, algorithms, and frameworks each offer different trade-offs, and together they give organizations many ways to improve data processing efficiency.