This article introduces Apache Hadoop processing and explores the key components and capabilities that have made it a mainstay of big data analytics.

Covering the Hadoop Distributed File System (HDFS), MapReduce, and Yet Another Resource Negotiator (YARN), this guide explains how Hadoop handles parallel processing and resource management for large datasets.

Introduction to Apache Hadoop Processing

Apache Hadoop is an open-source framework designed for distributed storage and processing of large data sets across clusters of computers. It provides a cost-effective solution for dealing with big data by enabling parallel processing, fault tolerance, and scalability.

Components of Apache Hadoop

- Hadoop Distributed File System (HDFS): HDFS is a distributed file system that stores data across multiple machines, providing high-throughput access to application data.

- MapReduce: MapReduce is a programming model for processing and generating large data sets in parallel across a distributed cluster.

- YARN (Yet Another Resource Negotiator): YARN is the resource management layer of Hadoop that enables different data processing engines to run on the same Hadoop cluster.

Importance of Parallel Processing in Big Data Analytics

Parallel processing is central to big data analytics because it allows many tasks to run simultaneously across a distributed system. By breaking a job into smaller sub-tasks that execute in parallel on different machines, Apache Hadoop can process massive datasets far faster than a single machine could, and it can scale further simply by adding nodes.

Apache Hadoop Distributed File System (HDFS)

The Apache Hadoop Distributed File System (HDFS) is the primary storage system used by Hadoop applications. It is designed to store and manage large volumes of data across a distributed cluster of commodity hardware.

Architecture of HDFS

HDFS follows a master-slave architecture in which the NameNode acts as the master and the DataNodes act as slaves. The NameNode manages the file system metadata, such as file names, permissions, the mapping of files to blocks, and the locations of those blocks. DataNodes store the actual data blocks and send periodic heartbeats and block reports to the NameNode, so that it knows which nodes are alive and which blocks each one holds.
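To make this division of labor concrete, here is a minimal Python sketch of the kind of metadata the NameNode keeps. The `NameNode` class, its methods, and the block and node names are hypothetical and do not mirror the real HDFS code or API; the sketch only illustrates that the NameNode maps files to blocks and blocks to DataNodes, learning replica locations from DataNode block reports.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NameNode:
    """Toy model of NameNode metadata (hypothetical; not the HDFS API)."""

    # File path -> ordered list of block IDs that make up the file.
    files: dict[str, list[str]] = field(default_factory=dict)
    # Block ID -> set of DataNodes that reported holding a replica.
    block_locations: dict[str, set[str]] = field(default_factory=dict)

    def add_file(self, path: str, block_ids: list[str]) -> None:
        # Record the namespace entry; locations are filled in by block reports.
        self.files[path] = list(block_ids)
        for block_id in block_ids:
            self.block_locations.setdefault(block_id, set())

    def block_report(self, datanode: str, block_ids: list[str]) -> None:
        # A DataNode tells the NameNode which blocks it currently stores.
        for block_id in block_ids:
            self.block_locations.setdefault(block_id, set()).add(datanode)

    def locate(self, path: str) -> list[tuple[str, list[str]]]:
        # Clients ask the NameNode where blocks live, then read the bytes
        # directly from DataNodes; file data never passes through the NameNode.
        return [(b, sorted(self.block_locations[b])) for b in self.files[path]]


nn = NameNode()
nn.add_file("/logs/app.log", ["blk_1", "blk_2"])
nn.block_report("dn1", ["blk_1", "blk_2"])
nn.block_report("dn2", ["blk_1"])
nn.block_report("dn3", ["blk_2"])
print(nn.locate("/logs/app.log"))
# [('blk_1', ['dn1', 'dn2']), ('blk_2', ['dn1', 'dn3'])]
```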

Data Storage and Replication in HDFS

Files in HDFS are split into large blocks, typically 128 MB (the default) or 256 MB, and each block is replicated across multiple DataNodes for fault tolerance. The default replication factor is 3, meaning each block is stored on three different DataNodes. If a DataNode fails, the data remains available from the other replicas, and the NameNode schedules new copies to restore the replication factor.
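The block and replication arithmetic can be sketched in a few lines of Python. The helper functions are hypothetical; the constants mirror the usual defaults of the `dfs.blocksize` and `dfs.replication` settings, and the round-robin placement is a deliberate simplification (real HDFS uses a rack-aware placement policy).

```python
from __future__ import annotations

MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # mirrors the usual dfs.blocksize default
REPLICATION = 3        # mirrors the usual dfs.replication default


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size of each block; only the last block may be smaller."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(num_blocks: int, datanodes: list[str],
                   replication: int = REPLICATION) -> dict[int, set[str]]:
    """Simplified round-robin placement on distinct nodes (not HDFS's rack-aware policy)."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return {
        b: {datanodes[(b + i) % len(datanodes)] for i in range(replication)}
        for b in range(num_blocks)
    }


blocks = split_into_blocks(1000 * MB)
print(len(blocks), blocks[-1] // MB)    # 8 104  (seven full blocks + one 104 MB block)
print(sum(blocks) * REPLICATION // MB)  # 3000   (raw disk used for a 1000 MB file)

placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
failed = "dn2"
survivors = {b: nodes - {failed} for b, nodes in placement.items()}
print(all(survivors.values()))          # True: every block still has a live replica
```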

Advantages of Using HDFS for Large-Scale Data Storage

- Scalability: HDFS can scale horizontally by adding more DataNodes to the cluster, allowing it to store and process petabytes of data.

- Fault Tolerance: The replication of data across multiple nodes ensures that data is not lost in the event of hardware failures.

- High Throughput: HDFS is optimized for streaming, sequential reads of large files rather than low-latency random access, which makes it efficient for batch processing of large datasets.

- Cost-Effective: HDFS runs on commodity hardware, making it a cost-effective solution for storing and processing big data.

MapReduce in Apache Hadoop

MapReduce is a programming model and processing framework used in Apache Hadoop for large-scale data processing. It enables parallel processing of data across a distributed cluster of computers, allowing for efficient and scalable processing of massive datasets.

Map and Reduce Phases

The MapReduce process consists of two main phases: the Map phase and the Reduce phase. During the Map phase, the input data is divided into input splits, and each split is processed by a separate map task running in parallel on a node in the cluster. Each map task applies a user-defined map function to every input record and emits intermediate key-value pairs.

In the Reduce phase, the intermediate key-value pairs from the Map phase are shuffled across the network, sorted, and grouped by key. The user-defined reduce function is then applied to each key and its list of values to produce the final output, typically aggregating or summarizing the results of the Map phase (for example, counting how often each word appears).
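The classic word-count example shows both phases end to end. This is a single-process Python simulation, assuming in-memory lists instead of HDFS files and plain functions instead of Hadoop's `Mapper` and `Reducer` classes; it is meant to show the data flow, not the Hadoop API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Map: emit (word, 1) for every word in one input record.
    return [(word.lower(), 1) for word in line.split()]


def shuffle_and_sort(pairs):
    # Shuffle/sort: group intermediate values by key, keys in sorted order.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    # Reduce: aggregate all values seen for one key.
    return key, sum(values)


lines = ["the quick brown fox", "the lazy dog", "The fox"]
intermediate = chain.from_iterable(map_phase(line) for line in lines)
result = [reduce_phase(k, vs) for k, vs in shuffle_and_sort(intermediate)]
print(result)
# [('brown', 1), ('dog', 1), ('fox', 2), ('lazy', 1), ('quick', 1), ('the', 3)]
```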

Parallel Data Processing

MapReduce parallelizes work by distributing map and reduce tasks across the nodes of a Hadoop cluster. Where possible, the framework schedules each map task on a node that already stores the relevant HDFS block, so computation moves to the data rather than the data moving across the network. Because each chunk is processed independently, adding nodes lets the cluster process more data in parallel, which significantly reduces the time needed to analyze very large datasets.
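The following sketch illustrates the split-process-merge pattern: each hypothetical `map_task` counts words in one split independently, the splits are processed concurrently, and the partial results are merged. It uses Python threads for simplicity (for CPU-bound work in CPython, a `ProcessPoolExecutor` would be the more realistic choice), and the splits stand in for HDFS blocks; it is an illustration, not how Hadoop actually schedules tasks.

```python
from __future__ import annotations

from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_task(split: list[str]) -> Counter:
    # Each task sees only its own split and produces partial counts.
    return Counter(word.lower() for line in split for word in line.split())


def run_job(lines: list[str], num_splits: int) -> Counter:
    # Divide the input into roughly equal splits (stand-ins for HDFS blocks).
    size = max(1, -(-len(lines) // num_splits))  # ceiling division
    splits = [lines[i:i + size] for i in range(0, len(lines), size)]
    # Process all splits concurrently.
    with ThreadPoolExecutor(max_workers=num_splits) as pool:
        partials = list(pool.map(map_task, splits))
    # Merge the partial results, as the reduce side would.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


lines = ["a b a", "b c", "a c c", "d"]
print(run_job(lines, 2) == run_job(lines, 1))  # True: same answer, split or not
print(run_job(lines, 2)["a"])                   # 3
```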

Yet Another Resource Negotiator (YARN)

YARN, short for Yet Another Resource Negotiator, is the component of Apache Hadoop responsible for managing resources in a Hadoop cluster. It allocates memory and CPU across the cluster so that diverse workloads can share the same machines efficiently.

Improved Cluster Utilization

YARN improves cluster utilization by allocating resources more flexibly. In Hadoop 1.x, a single JobTracker handled both resource management and job scheduling and monitoring for MapReduce. YARN splits these responsibilities: a global ResourceManager arbitrates resources among all applications, a NodeManager on each machine manages that machine's resources, and a per-application ApplicationMaster negotiates resources and coordinates the application's tasks. This separation lets multiple applications share cluster resources more effectively.

- YARN allocates resources dynamically, in the form of containers (bundles of memory and CPU on a node), based on what each application requests, rather than reserving fixed map and reduce slots.

- It allows multiple workloads to run concurrently on the same cluster, reducing the amount of capacity left idle.

- YARN supports a variety of processing frameworks beyond MapReduce, such as Spark and Tez, enabling organizations to run different types of workloads on the same cluster.
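A toy model can make container allocation more concrete. The `ToyResourceManager` and `Node` classes below are hypothetical and far simpler than YARN's real schedulers: they assume each NodeManager advertises free memory and vcores, grant container requests first-fit, and return resources to the pool when an application finishes, which is what lets a MapReduce job and, say, a Spark application share the same nodes.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_mb: int
    free_vcores: int


class ToyResourceManager:
    """Greatly simplified model of YARN's ResourceManager (hypothetical API)."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.containers = []  # (app_id, node_name, mb, vcores)

    def request(self, app_id, count, mb, vcores):
        """Grant up to `count` containers of one size; return how many were granted."""
        granted = 0
        for _ in range(count):
            # First-fit: pick the first node with enough free memory and CPU.
            node = next((n for n in self.nodes
                         if n.free_mb >= mb and n.free_vcores >= vcores), None)
            if node is None:
                break  # no room left; a real scheduler would keep the request pending
            node.free_mb -= mb
            node.free_vcores -= vcores
            self.containers.append((app_id, node.name, mb, vcores))
            granted += 1
        return granted

    def release(self, app_id):
        """Return all of an application's containers to the pool."""
        for app, node_name, mb, vcores in self.containers:
            if app == app_id:
                node = next(n for n in self.nodes if n.name == node_name)
                node.free_mb += mb
                node.free_vcores += vcores
        self.containers = [c for c in self.containers if c[0] != app_id]


rm = ToyResourceManager([Node("nm1", 8192, 8), Node("nm2", 8192, 8)])
print(rm.request("mapreduce-job", 4, mb=2048, vcores=1))  # 4 (all on nm1)
print(rm.request("spark-app", 6, mb=2048, vcores=2))      # 4 (only nm2 has room)
rm.release("mapreduce-job")
print(rm.request("spark-app", 2, mb=2048, vcores=2))      # 2 (reuses the freed nm1)
```

The second application initially gets only what fits on `nm2`, then grows into `nm1` once the first application releases its containers; real YARN adds queues, priorities, and pending requests on top of this basic idea.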

Benefits for Running Diverse Workloads

YARN offers several benefits for running diverse workloads in Hadoop, making it a versatile and efficient resource management system for big data processing.

- By supporting multiple processing frameworks, YARN enables organizations to run a wide range of applications, from batch processing to real-time analytics, on the same cluster.

- Its pluggable schedulers, such as the CapacityScheduler and the FairScheduler, organize applications into queues, providing isolation and prioritization so that critical workloads get the resources they need to meet their performance requirements.

- YARN’s scalability and fault tolerance capabilities make it well-suited for handling large-scale data processing tasks across multiple nodes in a Hadoop cluster.
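The idea of guaranteed queue shares with elastic borrowing can be sketched as below. This hypothetical `allocate` function only loosely mirrors the CapacityScheduler's notion of queue capacities; it assumes capacities are integer percentages that sum to at most 100 and ignores preemption, per-user limits, and container sizes.

```python
from __future__ import annotations


def allocate(cluster_gb: int, queues: dict[str, int],
             demand_gb: dict[str, int]) -> dict[str, int]:
    """Toy queue-sharing model (hypothetical, not the CapacityScheduler algorithm).

    `queues` maps a queue name to its guaranteed share of the cluster in percent.
    """
    # 1. Every queue first receives what it asks for, up to its guarantee.
    alloc = {}
    for name, pct in queues.items():
        guaranteed = cluster_gb * pct // 100
        alloc[name] = min(demand_gb.get(name, 0), guaranteed)
    # 2. Capacity a queue is not using is lent to queues that want more,
    #    largest guaranteed share first.
    idle = cluster_gb - sum(alloc.values())
    for name in sorted(queues, key=queues.get, reverse=True):
        extra = min(idle, demand_gb.get(name, 0) - alloc[name])
        alloc[name] += extra
        idle -= extra
    return alloc


shares = {"production": 70, "adhoc": 30}
# Production is quiet, so ad hoc work borrows its idle capacity.
print(allocate(100, shares, {"production": 50, "adhoc": 200}))
# {'production': 50, 'adhoc': 50}
# Production is busy, so it keeps its full guarantee despite heavy ad hoc demand.
print(allocate(100, shares, {"production": 90, "adhoc": 200}))
# {'production': 70, 'adhoc': 30}
```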

Apache Hadoop Ecosystem

The Apache Hadoop ecosystem consists of a variety of tools and frameworks that work together to extend Hadoop's data processing capabilities and support comprehensive data analysis. Each component handles a different stage of big data processing.

Hive

Hive is a data warehousing tool built on top of Hadoop that provides a SQL-like query language, HiveQL, for querying and managing large datasets stored in HDFS. Hive compiles queries into distributed jobs (MapReduce, or Tez in newer deployments), so users can analyze data with familiar SQL constructs instead of writing low-level MapReduce code.
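Conceptually, a HiveQL aggregation turns into map and reduce work. The Python sketch below assumes a hypothetical `employees` table held in a list and shows what a `GROUP BY` average boils down to: the map side emits a partial `(sum, count)` per row and the reduce side combines the partials per key. It is an illustration of the idea, not Hive's actual query plan.

```python
from collections import defaultdict

# HiveQL an analyst might write (table and columns are hypothetical):
#   SELECT dept, AVG(salary) FROM employees GROUP BY dept;

employees = [
    ("eng", 120), ("eng", 100), ("sales", 80), ("sales", 60), ("hr", 70),
]


def mapper(row):
    dept, salary = row
    # Emit a partial (sum, count) so averages can be combined correctly later.
    return dept, (salary, 1)


def reducer(pairs):
    # Grouping by key here stands in for Hadoop's shuffle and sort.
    totals = defaultdict(lambda: [0, 0])
    for dept, (salary, count) in pairs:
        totals[dept][0] += salary
        totals[dept][1] += count
    return {dept: s / c for dept, (s, c) in sorted(totals.items())}


print(reducer(map(mapper, employees)))
# {'eng': 110.0, 'hr': 70.0, 'sales': 70.0}
```

Emitting `(sum, count)` rather than a per-row average matters: averages of partial averages are wrong in general, whereas sums and counts can be merged in any order.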

Pig

Pig is a high-level platform for analyzing large datasets in Hadoop. Users express data transformation and analysis tasks in a scripting language called Pig Latin, and Pig compiles and optimizes these scripts into a series of MapReduce jobs (newer releases can also target Tez), making it easier to process and manipulate data in a distributed environment.

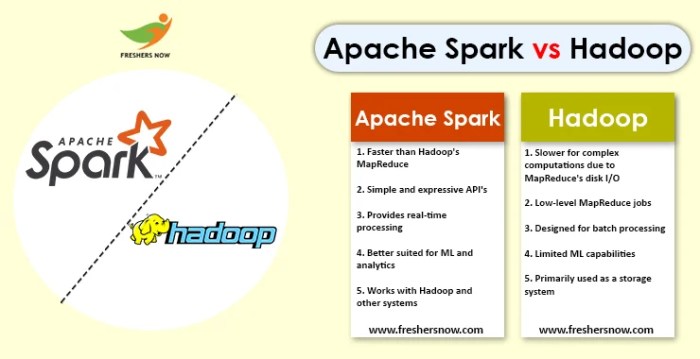

Spark

Spark is a fast, general-purpose cluster computing engine that keeps intermediate data in memory where possible, which makes it considerably faster than MapReduce for iterative and interactive workloads. Spark integrates with Hadoop by running on YARN and reading data from HDFS, and it ships with libraries for SQL, streaming, machine learning, and graph processing.

Importance of Integration

Integrating different tools within the Apache Hadoop ecosystem is essential for achieving comprehensive data analysis. By combining tools like Hive, Pig, and Spark, organizations can leverage the strengths of each tool to handle various aspects of data processing. This integration allows for seamless data flow and processing across different stages of the data pipeline, enabling organizations to extract valuable insights and make informed decisions based on their data.

In conclusion, Apache Hadoop has transformed the way organizations store and analyze massive amounts of data, offering scalability, fault tolerance, and flexibility in data processing. Its core components and ecosystem tools provide a solid foundation for taking your data analytics further.
